What are Azure Native Services?

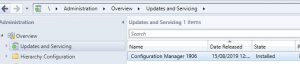

Azure Native Services Overview.

Azure native services are cloud-based solutions that are developed, managed, and supported by Microsoft. These services are designed to help organizations build and deploy applications on the Azure cloud platform, and take advantage of the scalability, security, and reliability of the Azure infrastructure. In this blog post, we’ll take a look at some of the key Azure native services that are available, and how they can be used to build and run cloud-based applications.

What are the native services in Azure?

Azure Virtual Machines Overview.

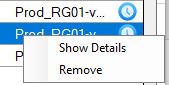

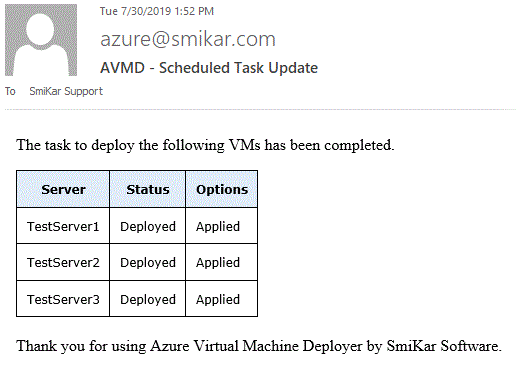

Azure Virtual Machines (VMs): Azure VMs allow you to create and manage virtual machines in the Azure cloud. You can choose from a variety of VM sizes and configurations, and you can use your own images or choose from a wide range of pre-configured images that are available in the Azure Marketplace. Azure VMs are a cost-effective way to run a wide range of workloads in the cloud, including web servers, databases, and applications.

Azure VMs can run a variety of operating systems, including Windows and Linux, and support a wide range of workloads, such as running applications, hosting websites, and performing data processing tasks.

With Azure VMs, users can quickly spin up a new VM, choosing from a variety of pre-configured virtual machine images or creating their own custom image. Users can also scale the resources of a VM (such as CPU and memory) up or down by changing its size, and can place multiple VMs in a virtual network to build a scalable and fault-tolerant solution.

Azure VMs also provide an additional layer of security: you can apply security policies and firewall rules, and integrate with Azure AD for identity-based access to the VMs.

Additionally, Azure VMs can be combined with other Azure services, such as Azure Storage and Azure SQL Database, to create a complete and highly available solution for running applications and storing data in the cloud.

Azure Kubernetes Service Overview.

Azure Kubernetes Service (AKS): AKS is a fully managed Kubernetes service that makes it easy to deploy, scale, and manage containerized applications. With AKS, you can deploy and run containerized applications on Azure with just a few clicks, and you can scale your deployments up or down as needed to meet changing demand. AKS is a great choice for organizations that are looking to build cloud-native applications that are scalable, resilient, and easy to manage.

AKS makes it easy to deploy and manage a Kubernetes cluster on Azure, allowing developers to focus on their applications, rather than the underlying infrastructure.

AKS is built on top of the Kubernetes open-source container orchestration system and enables users to quickly create and manage a cluster of virtual machines that run containerized applications.

With AKS, users can easily deploy and scale their containerized applications and services, and can also take advantage of built-in Kubernetes features such as automatic scaling, self-healing, and rolling updates. Additionally, you can monitor and troubleshoot Kubernetes clusters with Azure Monitor and Log Analytics.
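To make the automatic scaling mentioned above a little more concrete, here is a simplified model of the replica calculation documented for the Kubernetes Horizontal Pod Autoscaler: the desired replica count is the current count multiplied by the ratio of the observed metric to its target, rounded up. This is a plain-Python sketch, not AKS or Kubernetes code. The function name and the default bounds are assumptions made for illustration, and the real autoscaler also applies a tolerance and stabilization behavior that are left out here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Simplified Horizontal Pod Autoscaler calculation (illustrative only)."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Scale the current replica count by how far the metric is from its target.
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Keep the result within the configured bounds (assumed defaults).
    return max(min_replicas, min(max_replicas, raw))
```

For example, four replicas running at 90% average CPU against a 60% target would be scaled out to six replicas under this model.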

AKS also integrates well with other Azure services, such as Azure Container Registry, Azure Monitor, and Azure Active Directory, to provide a complete solution for managing containerized applications in the cloud. Additionally, AKS supports Azure DevOps and other CI/CD tools.

By using AKS, organizations can benefit from the flexibility and scalability of containers, and can also take advantage of Azure’s global network of data centers and worldwide compliance certifications to build and deploy applications with confidence.

Azure Functions Overview.

Azure Functions: Azure Functions is a serverless compute service that allows you to run code in response to specific triggers, such as a change in data or a request from another service. Azure Functions is a great way to build and deploy microservices, and it’s especially useful for organizations that need to process large volumes of data or perform tasks on a regular schedule.

Azure Functions is a serverless compute service provided by Microsoft Azure that allows developers to run event-triggered code in the cloud without having to provision or manage any underlying infrastructure.

Azure Functions lets developers write code in a variety of languages, including C#, JavaScript, F#, Python, and more. Functions can be triggered by a wide range of events, including HTTP requests, messages in a queue, or changes in data stored in Azure. Once a function is triggered, the platform provisions the compute needed to run it, so the developer does not need to worry about the underlying infrastructure or scaling.

Azure functions are designed to be small, single-purpose units of code that respond to specific events. They can also integrate with other Azure services and connectors to create end-to-end data processing and workflow pipelines.
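The idea of small, single-purpose functions bound to triggers can be sketched in a few lines of plain Python. This is only a conceptual model and does not use the Azure Functions programming model or SDK. The `on` decorator, the `dispatch` helper, the trigger names, and the two example functions are all hypothetical.

```python
from typing import Callable, Dict, List

# Hypothetical registry mapping a trigger name to the functions bound to it.
_handlers: Dict[str, List[Callable[[dict], str]]] = {}


def on(trigger: str):
    """Decorator that binds a function to a named trigger (illustrative only)."""
    def register(func):
        _handlers.setdefault(trigger, []).append(func)
        return func
    return register


@on("http")
def greet(event: dict) -> str:
    # Single purpose: answer an HTTP-style request.
    return f"Hello, {event.get('name', 'world')}"


@on("queue")
def process_order(event: dict) -> str:
    # Single purpose: handle one queued order message.
    return f"processed order {event['order_id']}"


def dispatch(trigger: str, event: dict) -> List[str]:
    """Run every function bound to the trigger and collect the results."""
    return [handler(event) for handler in _handlers.get(trigger, [])]
```

Each function knows nothing about the others. The platform's job, played here by `dispatch`, is to route each incoming event to whatever code is bound to that trigger.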

Azure Functions provides an efficient and cost-effective way to run and scale code in the cloud. Because Azure automatically provisions and scales the underlying infrastructure, it can be a cost-effective option for infrequently used or unpredictable workloads. Additionally, functions can be hosted on a Consumption plan, on an App Service plan, or as a Kubernetes pod, which provides more flexibility for production workloads.

Overall, Azure Functions is a powerful, serverless compute service that enables developers to build and run event-driven code in the cloud, without having to worry about the underlying infrastructure.

Azure SQL Database Overview.

Azure SQL Database is a fully managed relational database service provided by Microsoft Azure for deploying and managing structured data in the cloud. Azure SQL Database is built on top of Microsoft SQL Server and is designed to make it easy for developers to create and manage relational databases in the cloud without having to worry about infrastructure or scaling.

Azure SQL Database supports a wide range of data types and provides robust security features, such as transparent data encryption and advanced threat protection. Additionally, it provides built-in high availability and disaster recovery options, which eliminate the need to set up and configure on-premises infrastructure.

With Azure SQL Database, developers can quickly and easily create a new database and start working with data, while the service automatically manages the underlying infrastructure and scaling. Additionally, it provides a rich set of tools for monitoring, troubleshooting, and tuning the performance of databases.

Azure SQL Database also provides a number of options for deploying and managing databases, including single databases, elastic pools, and Managed Instance. Single databases suit workloads with predictable resource demands, elastic pools let multiple databases with varying usage share a common set of resources, and Managed Instance is suitable for larger and more complex workloads that need more control over the infrastructure.

Azure SQL Database can be integrated with other Azure services, such as Azure Data Factory and Azure Machine Learning, to create a complete data platform for building and deploying cloud-based applications and services.

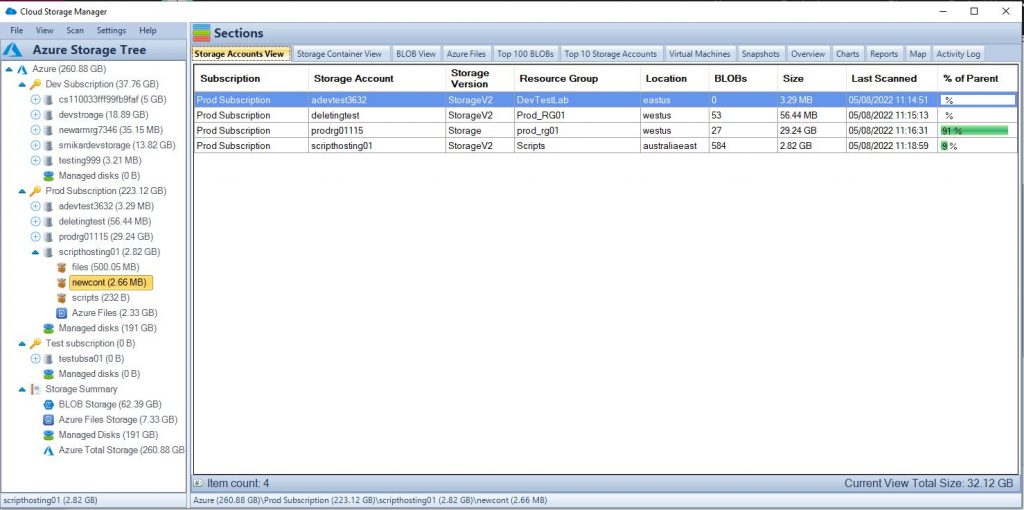

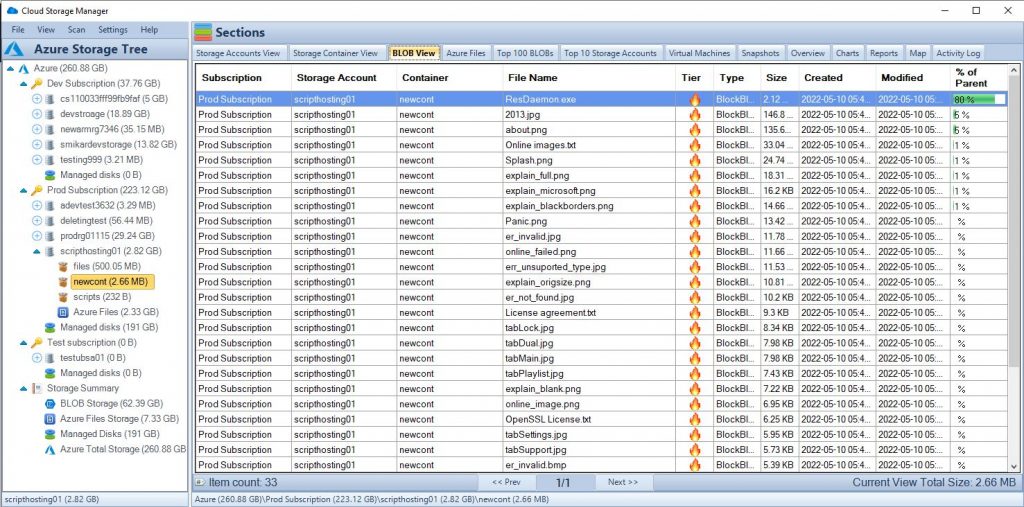

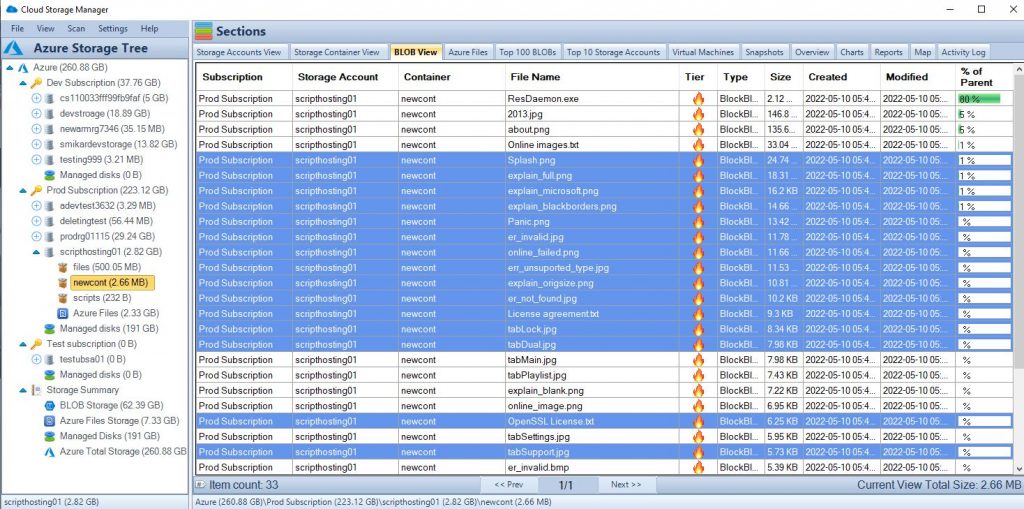

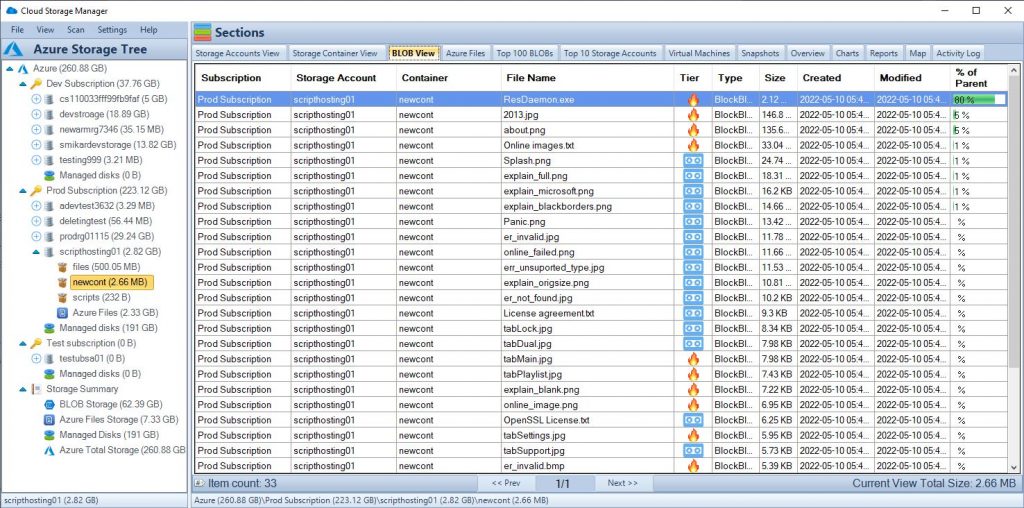

Azure Storage Overview.

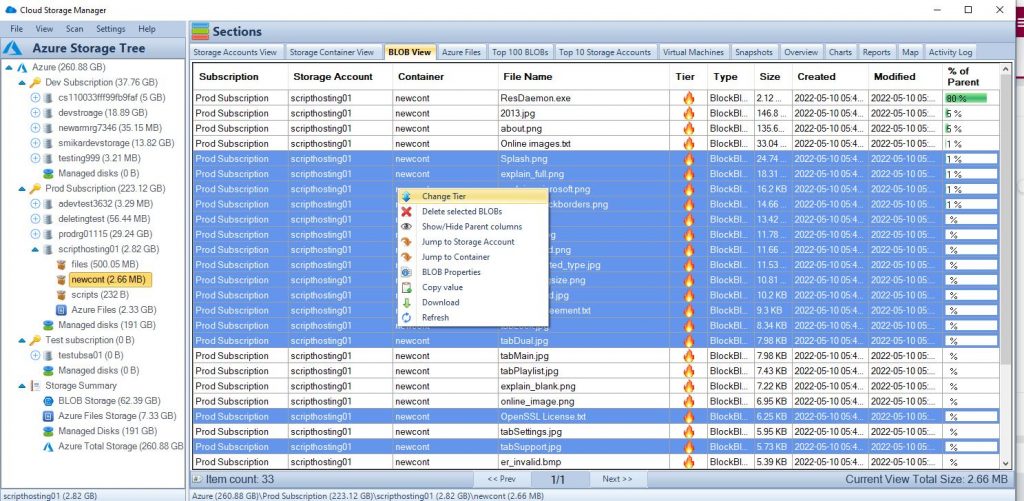

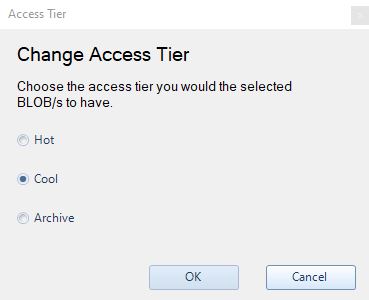

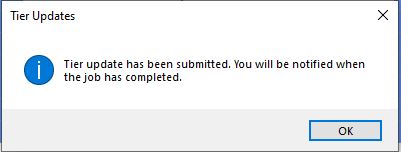

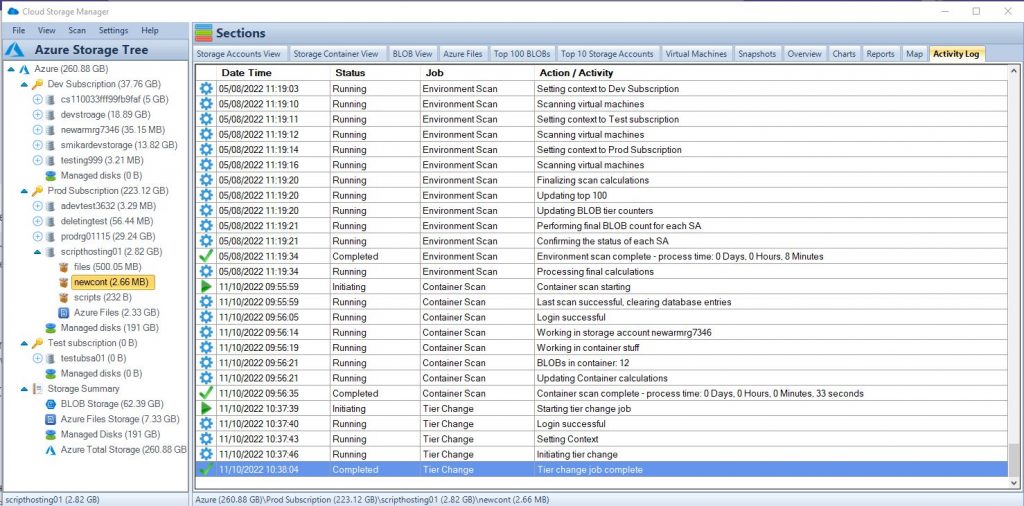

Azure Storage is a cloud-based service provided by Microsoft Azure for storing and managing unstructured data, such as binary files, text files, and media files. Azure Storage includes several different storage options, including Azure Blob storage, Azure File storage, Azure Queue storage, and Azure Table storage.

Azure Blob storage is optimized for storing and accessing massive amounts of unstructured data, such as text or binary data. It serves as the foundation of many other Azure services, including Azure Backup, Azure Media Services, and Azure Machine Learning.

Azure File storage is a service that allows you to create file shares in the cloud, accessible from any SMB 3.0 compliant client. This can be useful for scenarios where you have legacy applications that need to read and write files to a file share.

Azure Queue storage is a service for storing and retrieving messages in a queue, used to exchange messages between components of a distributed application.
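One detail worth knowing about queue-based messaging in Azure Queue storage is the visibility timeout: a message that has been received is hidden from other consumers for a period and reappears if it is not deleted in time. The sketch below models that behavior with an in-memory queue and an explicit clock. It is a toy stand-in, not the Azure Queue storage API, and the class names, method names, and the 30-second default used here are assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(eq=False)
class Message:
    body: str
    visible_at: float = 0.0  # time at which the message can be received


@dataclass
class InMemoryQueue:
    """Toy stand-in for a message queue; not the Azure Queue storage API."""
    messages: List[Message] = field(default_factory=list)

    def send(self, body: str) -> None:
        self.messages.append(Message(body))

    def receive(self, now: float, visibility_timeout: float = 30.0) -> Optional[Message]:
        # Hand out the first visible message and hide it for the timeout.
        for message in self.messages:
            if message.visible_at <= now:
                message.visible_at = now + visibility_timeout
                return message
        return None

    def delete(self, message: Message) -> None:
        # The consumer deletes a message only after processing it successfully.
        self.messages.remove(message)
```

If a consumer crashes before calling `delete`, the message becomes visible again once the timeout passes, so another component can pick it up.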

Azure Table storage is a service for storing and querying structured NoSQL data in the form of a key-value store.
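In Table storage, each entity is addressed by a partition key and a row key. A rough way to picture that key-value model is a dictionary keyed by the pair, as in the sketch below. This is an in-memory illustration only and not the Azure SDK. The class and method names are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Entity = Dict[str, object]


class InMemoryTable:
    """Toy key-value table keyed by (PartitionKey, RowKey); not the Azure SDK."""

    def __init__(self) -> None:
        self._rows: Dict[Tuple[str, str], Entity] = {}

    def upsert(self, entity: Entity) -> None:
        # Insert the entity, or replace the one stored under the same keys.
        key = (str(entity["PartitionKey"]), str(entity["RowKey"]))
        self._rows[key] = dict(entity)

    def get(self, partition_key: str, row_key: str) -> Optional[Entity]:
        # Point lookup: both keys together identify exactly one entity.
        return self._rows.get((partition_key, row_key))

    def query_partition(self, partition_key: str) -> List[Entity]:
        # Return all entities sharing a partition key, ordered by RowKey.
        ordered = sorted(self._rows.items(), key=lambda item: item[0])
        return [row for (pk, _), row in ordered if pk == partition_key]
```

Entities in the same table do not need to share the same properties, which is what makes the store schemaless: only the two keys are required.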

All of these services allow you to store and retrieve data in the cloud using standard REST APIs and SDKs, and they can be accessed from anywhere in the world via HTTP or HTTPS.

Azure Storage also provides built-in redundancy and automatically replicates data to ensure that it is always available, even in the event of an outage. It also provides automatic load balancing and offers built-in data protection, data archiving, and data retention options.

With Azure Storage, developers can easily and cost-effectively store and manage large amounts of unstructured data in the cloud, and take advantage of Azure’s global network of data centers and worldwide compliance certifications to build and deploy applications with confidence.

Azure Networking Overview.

Azure Networking is a set of services provided by Microsoft Azure for creating, configuring, and managing virtual networks (VNets) in the cloud. Azure Networking allows users to connect Azure resources and on-premises resources together in a secure and scalable manner.

With Azure Virtual Network, you can create a virtual representation of your own network in the Azure cloud, and define subnets, private IP addresses, security policies, and routing rules for those subnets. A virtual network gives you a fully isolated network environment in Azure, including the ability to use your own private IP address ranges and your own domain name system (DNS) servers.
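Planning subnets is mostly address arithmetic, which Python's standard `ipaddress` module handles well. The sketch below splits an address space into /24 subnets and works out how many addresses are usable in each, taking into account that Azure reserves five addresses in every subnet. The address range and the subnet names are hypothetical, and nothing here calls Azure.

```python
import ipaddress

# Hypothetical address space and subnet names, chosen only for illustration.
vnet = ipaddress.ip_network("10.0.0.0/16")
names = ["web", "app", "data"]

# Carve the address space into /24 blocks and assign the first few to subnets.
subnets = dict(zip(names, vnet.subnets(new_prefix=24)))

AZURE_RESERVED_PER_SUBNET = 5  # addresses Azure reserves in every subnet


def usable_addresses(subnet) -> int:
    """Number of addresses left for resources after Azure's reservations."""
    return subnet.num_addresses - AZURE_RESERVED_PER_SUBNET


def subnet_for(address: str):
    """Return the name of the subnet containing the address, or None."""
    ip = ipaddress.ip_address(address)
    for name, subnet in subnets.items():
        if ip in subnet:
            return name
    return None
```

With this layout, `subnets["app"]` is `10.0.1.0/24`, and each /24 subnet leaves 251 addresses for your own resources.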

Azure ExpressRoute enables you to create private connections between Azure data centers and infrastructure that’s on your premises or in a colocation facility. ExpressRoute connections don’t go over the public internet, and they offer more reliability, faster speeds, and lower latencies than typical connections over the internet.

Azure VPN Gateway allows you to create secure, cross-premises connections to your virtual network from anywhere in the world. You can use VPN gateways to establish secure connections to your virtual network from other virtual networks in Azure, or from on-premises locations.

Azure Load Balancer distributes incoming traffic among multiple virtual machines, ensuring that no single virtual machine is overwhelmed by too much traffic. Load Balancer supports both internet-facing and internal traffic, and because it operates at the transport layer (TCP and UDP), it is agnostic to the application protocol.
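By default, Azure Load Balancer uses hash-based distribution over a five-tuple: source IP, source port, destination IP, destination port, and protocol. The sketch below illustrates the general idea of mapping a flow to a backend with a stable hash. The hash function, the `pick_backend` name, and the backend names are assumptions for illustration and are not how Azure implements it internally.

```python
import hashlib
from typing import List, Tuple

# Source IP, source port, destination IP, destination port, protocol.
FiveTuple = Tuple[str, int, str, int, str]


def pick_backend(flow: FiveTuple, backends: List[str]) -> str:
    """Map a flow to one backend with a stable hash (illustrative only)."""
    if not backends:
        raise ValueError("backend pool is empty")
    # Build a deterministic key from the five-tuple.
    key = "|".join(str(part) for part in flow).encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Reduce the hash to an index into the backend pool.
    index = int.from_bytes(digest[:8], "big") % len(backends)
    return backends[index]
```

Because the hash depends only on the five-tuple, packets from the same flow always land on the same backend, while different flows spread across the pool.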

Azure Network Security Group allows you to apply network-level security to your Azure resources by creating security rules to control inbound and outbound traffic.
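Network security group rules are processed in priority order, with lower numbers processed first, and processing stops at the first rule that matches. The sketch below models that evaluation in plain Python. It is heavily simplified: real rules also match on source, destination, and protocol, and Azure adds its own default rules. The `Rule` fields, the `evaluate` function, the `default` parameter, and the example rules are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Rule:
    name: str
    priority: int          # lower number is evaluated first
    direction: str         # "Inbound" or "Outbound"
    port: Optional[int]    # None matches any port
    action: str            # "Allow" or "Deny"


def evaluate(rules: List[Rule], direction: str, port: int, default: str = "Deny") -> str:
    """Return the action of the first matching rule in priority order."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.direction == direction and rule.port in (None, port):
            return rule.action
    # No rule matched; fall back to the assumed default action.
    return default


# Example rule set: allow HTTPS in, deny every other inbound port.
example_rules = [
    Rule("deny-all-inbound", 4096, "Inbound", None, "Deny"),
    Rule("allow-https", 100, "Inbound", 443, "Allow"),
]
```

Here inbound traffic on port 443 is allowed because the rule with priority 100 matches before the catch-all deny at 4096, regardless of the order in which the rules were listed.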

Overall, Azure Networking services provide a comprehensive set of tools for creating, configuring, and managing virtual networks in the cloud, and allow organizations to securely connect their Azure resources with on-premises resources. They also provide a set of security features to protect the resources in the network.

Azure Native Services Conclusion.

In conclusion, Azure native services provide a powerful and flexible platform for building and running cloud-based applications. Whether you’re looking to create a new application from scratch, or you’re looking to migrate an existing application to the cloud, Azure has a range of native services that can help you achieve your goals. By using Azure native services, you can take advantage of the scalability, security, and reliability of the Azure infrastructure, and you can build and deploy applications that are designed to meet the needs of your organization.