Is Disaster Recovery Really Worth The Trouble?

(Part 2 of a 4-part series)

Guest Post by Tommy Tang – Cloud Evangelist

In the previous article I mentioned that Architecture is the foundation, the bedrock, for implementing Disaster Recovery (DR), and that it must be part of the broader discussion on system resilience and availability, otherwise funding will be hard to come by. You may ask: what are the key design criteria for DR? I believe, first and foremost, the design must be ‘Fit for Purpose’. In other words, you need to understand what the customer wants in terms of requirements, objectives and expected outcomes. The following technical terms are commonly used to measure DR capability, and I will provide a brief explanation of each metric.

Recovery Time Objective (RTO)

- It is the targeted duration within which a service or system needs to be restored during Disaster Recovery. Typically RTO is measured in hours or days, and it’s no surprise to find that human ‘think’ time often lengthens the recovery. RTO should be tightly aligned with the Business Continuity requirement (i.e. Maximum Acceptable Outage, MAO), given that system recovery is only one aspect of the business service restoration process.

Recovery Point Objective (RPO)

- It is the maximum targeted period over which data or transactions might be lost without recovery. You can view RPO as the maximum data loss that you can afford, expressed as time. So ‘Zero RPO’ means no data loss is permitted. Not even a second. The actual amount of data lost depends very much on the affected system. For example, an online stock trading system that suffers a 5-minute data loss could lose hundreds of transactions worth millions of dollars. Conversely, an in-house Human Resource (HR) system is unlikely to suffer any data loss over the same 5-minute interval, given that changes to an HR system are infrequent.
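To make the trading versus HR comparison concrete, the worst-case loss for a given RPO window can be estimated from the transaction rate. This is only a rough sketch: the function name and both transaction rates are hypothetical figures chosen for illustration, not measurements from a real system.

```python
def estimated_lost_transactions(tx_per_minute: float, rpo_minutes: float) -> float:
    """Worst-case transactions lost if the whole RPO window of data is lost."""
    if tx_per_minute < 0 or rpo_minutes < 0:
        raise ValueError("rate and RPO must be non-negative")
    return tx_per_minute * rpo_minutes


# Hypothetical rates: a busy trading system vs. a quiet in-house HR system.
trading_loss = estimated_lost_transactions(tx_per_minute=60, rpo_minutes=5)
hr_loss = estimated_lost_transactions(tx_per_minute=0.01, rpo_minutes=5)

print(f"trading: ~{trading_loss:.0f} transactions at risk")
print(f"HR:      ~{hr_loss:.2f} transactions at risk")
```

The same 5-minute window puts hundreds of transactions at risk in one system and effectively none in the other, which is why RPO should be set per service rather than globally.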

Mean Time To Recovery (MTTR)

- It is the average time taken for a device, component or service to recover from a failure once it has been detected. Unlike RTO and RPO, MTTR includes the element of monitoring and detection, and it applies to any failure scenario, not only to a DR event. When you’re designing the appropriate DR solution for your customer, MTTR must be rigorously scrutinised for each software and hardware component in order to meet the targeted RTO.
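As a minimal sketch of how MTTR might be derived for one component, the snippet below averages the time between detection and recovery across past incidents. The incident timestamps are invented for illustration, and the choice to start the clock at detection is an assumption; if you want detection delay included, start from the failure time instead.

```python
from datetime import datetime, timedelta


def mean_time_to_recovery(incidents: list[tuple[datetime, datetime]]) -> timedelta:
    """Average of (recovered - detected) across all incidents."""
    if not incidents:
        raise ValueError("need at least one incident")
    total = sum(
        (recovered - detected for detected, recovered in incidents),
        timedelta(),
    )
    return total / len(incidents)


# Hypothetical incident log for one component: (detected, recovered).
incidents = [
    (datetime(2024, 1, 5, 9, 0), datetime(2024, 1, 5, 9, 20)),
    (datetime(2024, 2, 11, 14, 0), datetime(2024, 2, 11, 14, 40)),
    (datetime(2024, 3, 2, 22, 0), datetime(2024, 3, 2, 23, 0)),
]
mttr = mean_time_to_recovery(incidents)
print(f"MTTR: {mttr}")
```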

Let’s move over to the business side of the DR coin and see how these metrics are applied. I think it is a safe bet to assume each business service has already been assigned a predetermined service criticality classification, and that each classification includes RTO and RPO requirements. For illustration purposes, let’s say a “Category A” service is a highly critical customer portal, so it might have a 2-hour RTO and Zero RPO requirement, while a “Category C” internal timesheet service could have a 12-hour RTO with a 1-hour RPO.

In a real DR event (or DR exercise) the classification is used to determine the order in which services are restored. It is neither practical nor sensible to have all services weighted equally, or to have too many services rated critical, given the limited resources and immense pressure during DR. The right balance must be sought and agreed upon by all business owners.
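The classification can be sketched as a small lookup that drives the restoration order. The Category A and Category C values are the illustrative ones used above; the `ServiceClass` type and the rule of sorting by RTO and then RPO are assumptions for this example, not a prescribed method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceClass:
    name: str
    rto_hours: float
    rpo_hours: float


# Values taken from the illustration in the text.
CATEGORY_A = ServiceClass("Category A", rto_hours=2, rpo_hours=0)
CATEGORY_C = ServiceClass("Category C", rto_hours=12, rpo_hours=1)

services = {
    "internal timesheet": CATEGORY_C,
    "customer portal": CATEGORY_A,
}


def restoration_order(services: dict[str, ServiceClass]) -> list[str]:
    """Sort services so the tightest RTO (then tightest RPO) is restored first."""
    return sorted(
        services,
        key=lambda name: (services[name].rto_hours, services[name].rpo_hours),
    )


print(restoration_order(services))
```

If too many services share the top category, the sort stops being useful, which is the practical reason to keep the critical tier small.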

Now you have a basic understanding of the DR requirements and are keen to get started. Hold off launching the Microsoft Visio app and drawing those beautiful boxes just yet. I’d like to share with you one simple resilience design principle which I have been using, and that is to eliminate any “Single Point of Failure”. By virtue of having 2 working and functionally identical components in the system you’d improve resilience by 100%! The 2x system is now capable of handling a single component failure without loss of service. The “Single Point of Failure” principle also applies to the physical data centre and is therefore very much relevant to DR design.
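One way to put a number on the benefit of a second component is the standard availability formula for redundant copies. The sketch below assumes failures are independent, which shared power, network or software faults can violate in practice, and the 99% figure is a hypothetical input rather than a measured value.

```python
def redundant_availability(component_availability: float, copies: int = 2) -> float:
    """Availability of N identical components when one surviving copy is enough.

    Assumes independent failures: the service is down only if every copy is down.
    """
    if not 0.0 <= component_availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    if copies < 1:
        raise ValueError("need at least one copy")
    return 1.0 - (1.0 - component_availability) ** copies


single = redundant_availability(0.99, copies=1)
pair = redundant_availability(0.99, copies=2)
print(f"single component: {single:.4%}")
print(f"redundant pair:   {pair:.4%}")
```

Under these assumptions a pair of 99% components behaves like a 99.99% service, but only if nothing upstream of the pair remains a single point of failure.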

As an IT architect you have a number of tried and proven solutions (aka architecture patterns) available in the toolkit at your disposal. The DR patterns described below are commonly found in most organisations.

Active-Active

The Active-Active DR pattern provisions two or more active, working software components spread across 2 data centres. For example, an N-tier system architecture may consist of 2x Web servers, 2x Application servers and 2x Database servers. Client connections and application workload are distributed between the 2 sites, either equally weighted or otherwise, via a Global DNS Service or Global Traffic Manager (GTM). The primary objective of the Active-Active DR design is to eliminate the data centre as a single point of failure. Under this design there is no need to initiate failover during Disaster Recovery because an identical system is already running at the alternate site and sharing the application workload (i.e. Zero RTO).
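The weighted distribution between sites can be illustrated with a toy model. This is not how any particular Global DNS or GTM product works; the site names, weights and the `pick_site` helper are hypothetical, and the point is only to show that losing one site leaves the other taking all traffic with no failover step.

```python
import random
from collections import Counter


def pick_site(weights: dict[str, float], rng: random.Random) -> str:
    """Choose a site with probability proportional to its weight.

    Sites with weight 0 are treated as unavailable and never chosen.
    """
    live = {site: w for site, w in weights.items() if w > 0}
    if not live:
        raise RuntimeError("no site available")
    sites = list(live)
    return rng.choices(sites, weights=[live[s] for s in sites], k=1)[0]


rng = random.Random(42)  # seeded so the run is repeatable
weights = {"site-1": 50, "site-2": 50}
normal = Counter(pick_site(weights, rng) for _ in range(1000))

# Site 1 is lost: its weight drops to 0 and site 2 absorbs every request.
weights["site-1"] = 0
after_loss = Counter(pick_site(weights, rng) for _ in range(1000))

print("normal:    ", dict(normal))
print("after loss:", dict(after_loss))
```

Note that the surviving site must be sized to carry the full workload, otherwise the design only moves the outage from one place to another.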

The Active-Active pattern is best reserved for critical systems or services (i.e. Category A) because of the high cost and complexity associated with implementing a distributed system. Not every application is capable of running in a distributed environment across 2 sites. The reason could be a software limitation such as a dependency on Global Sequencing or Two-Phase Commit. It’s highly desirable to formulate a prescriptive Active-Active design pattern to help mitigate the inherent cost and risks, and to align with the existing technology investment and future roadmap.

The biggest challenge is often encountered at the database tier. Are you able to run the database simultaneously across 2 sites? If so, is the data being replicated synchronously or asynchronously? Designing a fully distributed database solution with zero data loss (i.e. Zero RPO) is not trivial. You can choose to implement a partial Active-Active solution where every component except the database is active across 2 sites. Alternatively, you may want to relax the RPO requirement to a non-zero value so that asynchronous data replication can be used (e.g. 5-minute RPO).
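The trade-off between synchronous and asynchronous replication can be shown with a deliberately simplified model. It assumes the standby has applied every write committed up to a fixed lag before the failure, and treats synchronous replication as a lag of zero. The commit times and the 90-second lag are hypothetical values.

```python
def writes_lost_on_failover(
    commit_times: list[float], failure_time: float, replication_lag: float
) -> list[float]:
    """Writes committed on the primary but not yet applied on the standby.

    Simplified model: the standby holds every write committed at or before
    (failure_time - replication_lag). A lag of 0 models synchronous replication.
    """
    applied_up_to = failure_time - replication_lag
    return [t for t in commit_times if applied_up_to < t <= failure_time]


# Commit times in seconds since the start of the observation window.
commits = [0, 60, 120, 180, 240, 290]

sync_loss = writes_lost_on_failover(commits, failure_time=300, replication_lag=0)
async_loss = writes_lost_on_failover(commits, failure_time=300, replication_lag=90)

print("synchronous, lost writes: ", sync_loss)
print("asynchronous, lost writes:", async_loss)
```

In this model the replication lag at the moment of failure is the data you lose, so an asynchronous design only meets its RPO if the lag is monitored and kept below that target.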

From general observation I’ve found that a critical system’s database is typically configured with a warm standby DB with Zero RPO, where the failover operation can be manually initiated or automated. The warm standby DB configuration is also known as the Active-Passive DR pattern, which is explored further in the next section.

Recently I heard a story about Disaster Recovery. During a DR exercise, a service owner proclaimed that the targeted system was fully Active-Active across 2 sites and therefore no failover would ever be required. 30 minutes later the same service owner, with much reluctance, scrambled to contact the DBA team requesting an urgent Oracle DB failover to the DR site. A word of advice: many supposedly Active-Active implementations are only truly Active-Active up to the database tier, so it does pay to understand your system design. A one-page high-level system architecture diagram with network flows should suffice to summarise the DR capability without confusion.

Active-Passive

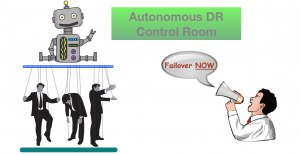

The Active-Passive DR pattern stipulates that one or more redundant software components are configured in warm standby mode at the alternate data centre. The DR failover operation can be either manually initiated or automated for each component, in the predetermined order for the respective application stack. Client connections and application workload are redirected to the active, live data centre via a Global DNS Service or Global Traffic Manager (GTM). Remember, the key difference from the Active-Active DR pattern is that only one active site can accept and process workload while the passive site lies dormant.

The primary objective of the Active-Passive design is the same as for Active-Active, namely to eliminate the data centre as a single point of failure, albeit with a higher RTO value. The time required to fail over varies and depends on the underlying design and technology deployed for the corresponding software component. A component failover can typically take 5 to 30 minutes (or even longer) to complete. Therefore the aggregated component failover time plus human think time is roughly equivalent to the RTO value (e.g. 4-hour RTO).
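The rule of thumb above, aggregated component failover time plus human think time, can be sketched as simple arithmetic. The component names, the individual failover times and the two hours of think time are hypothetical, and the sketch assumes components are failed over one after another rather than in parallel.

```python
def estimated_rto_minutes(
    component_failover_minutes: dict[str, float], think_time_minutes: float
) -> float:
    """Sequential failover: sum of component times plus human decision time."""
    return sum(component_failover_minutes.values()) + think_time_minutes


# Hypothetical application stack, each within the 5 to 30 minute range.
stack = {"database": 30, "application": 15, "web": 5, "dns/gtm": 10}

estimate = estimated_rto_minutes(stack, think_time_minutes=120)
target_rto_minutes = 4 * 60
meets_target = estimate <= target_rto_minutes

print(f"estimated RTO: {estimate} min, target: {target_rto_minutes} min, ok: {meets_target}")
```

In this made-up example the human think time is twice the technical failover time, which is a reminder to rehearse the decision process as well as the tooling.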

The Active-Passive design is suitable for most systems because it is relatively simple and cost effective. The two key technology enablers are storage replication and application native replication. Leveraging storage replication for DR is probably the most popular option because it is application agnostic. The storage replication technology itself is simple, mature and proven, and it’s generally regarded as low risk. The data replication between sites can be synchronous (i.e. Zero RPO) or asynchronous (i.e. non-zero RPO), and either option is sound depending on the RPO requirement.

Application specific replication typically uses the TCP/IP network to keep data in sync between the 2 sites. It can also be synchronous or asynchronous depending on the technology and configuration. The underlying replication technology is vendor specific and proprietary, so you’d need to rely on the vendor’s tools for monitoring, configuration and problem diagnosis. For example, you may want to implement a SQL Server Always On Availability Group for the warm standby DB, in which case you’d have to learn how to manage and monitor Windows Server Failover Cluster (WSFC). Application native replication is often found at the database tier, for example SQL Server Always On or Oracle Data Guard. Vendors typically publish a recommended DR configuration, so it’d be foolhardy not to follow their recommendation.

Active-Cold

Last of all is the Active-Cold DR pattern. This pattern is similar to Active-Passive except that the software component at the alternate site has not been instantiated. In some cases it may require a brand new virtual server on which to configure and start the application component. Or the replicated filesystem may need to be mounted manually before the application is started. Or a backup restoration process may be required to recover the software to the desired operating state.

The word ‘Cold’ implies that much work is needed, whatever it takes, to bring the service online. In many cases it’ll take hours or even days to complete the recovery task. Hence the RTO for an Active-Cold design is expected to be larger than for Active-Passive. However, just because it takes longer to recover doesn’t mean it is a bad solution. For example, it is perfectly acceptable to take one or two days to recover an internal timesheet system without causing much outrage. Put simply, it is “Horses for Courses”. You can also still achieve Zero RPO (i.e. no data loss) with an Active-Cold design by leveraging synchronous storage replication between sites. Not bad at all!

In this article I have covered the common DR related metrics: RTO, RPO and MTTR. I have also shared with you the ‘Single Point of Failure’ resilience design principle, which has served me well over the years. I have summarised, perhaps at more than summary length, the three common DR design patterns, interlaced with practical examples: Active-Active, Active-Passive and Active-Cold. I realise I have gone on a bit longer than expected in this article, so I’m saving some of the interesting thoughts and stories for the next article, which will focus on DR implementation.

This article is a guest post by Tommy Tang (https://www.linkedin.com/in/tangtommy/). Tommy is a well-rounded and knowledgeable Cloud Evangelist with over 25 years of IT experience covering many industries such as Telco, Finance, Banking and Government agencies in Australia. He is currently focusing on the Cloud phenomenon and ways to best advise customers on their often confusing and thorny Cloud journey.