by Mark | May 26, 2023 | Azure Blobs, Azure Files, Blob Storage, Storage Accounts

As we continue to journey through 2023, one of the highlights in the tech world has been the evolution of Azure Storage, Microsoft’s cloud storage solution. Azure Storage, known for its robustness and adaptability, has rolled out several exciting updates this year, each of them designed to enhance user experience, improve security, and provide more flexibility and control over data management.

Azure Storage has always been a cornerstone of the Microsoft Azure platform. The service provides a scalable, durable, and highly available storage infrastructure to meet the demands of businesses of all sizes. However, in the spirit of continuous improvement, Azure Storage has introduced new features and changes, setting new standards for cloud storage.

A New Era of Security with Azure Storage

A significant update this year has been the disabling of anonymous access and cross-tenant replication on new storage accounts by default. This change, set to roll out from August 2023, is an important step in bolstering the security posture of Azure Storage.

Traditionally, Azure Storage has allowed customers to configure anonymous access to storage accounts or containers. Although anonymous access to containers was already disabled by default to protect customer data, this new rollout means anonymous access to storage accounts will also be disabled by default. This change is a testament to Azure’s commitment to reducing the risk of data exfiltration.

Moreover, Azure Storage is disabling cross-tenant replication by default. This move is aimed at minimizing the possibility of data exfiltration due to unintentional or malicious replication of data when the right permissions are given to a user. It’s important to note that existing storage accounts are not impacted by this change. However, Microsoft strongly recommends that users follow security best practices and disable the anonymous access and cross-tenant replication settings if their scenarios do not require these capabilities.
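As a rough illustration, the two settings correspond to the `allowBlobPublicAccess` and `allowCrossTenantReplication` properties of a storage account in Azure Resource Manager. The sketch below only builds the JSON body that an account update could carry. The helper name `hardened_account_properties` is hypothetical, and how the body is sent (portal, CLI, SDK, or REST call) is deliberately left out.

```python
import json


def hardened_account_properties() -> dict:
    """Return an ARM-style properties patch that turns both features off."""
    return {
        "properties": {
            # Block anonymous (public) read access to containers and blobs.
            "allowBlobPublicAccess": False,
            # Block object replication to or from other tenants.
            "allowCrossTenantReplication": False,
        }
    }


if __name__ == "__main__":
    print(json.dumps(hardened_account_properties(), indent=2))
```

Existing accounts keep whatever values they already have, so a patch like this is only relevant where the settings were left enabled.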

Azure Files: More Power to You

Azure Files, a core component of Azure Storage, has also seen some significant updates. With a focus on redundancy, performance, and identity-based authentication, the changes bring more power and control to the users.

One of the exciting updates is the public preview of geo-redundant storage for large file shares. This feature significantly improves capacity and performance for standard SMB file shares when using geo-redundant storage (GRS) and geo-zone redundant storage (GZRS) options. This preview is available only for standard SMB Azure file shares and is expected to make data replication across regions more efficient.

Another noteworthy update is the introduction of a 99.99 percent SLA per file share for all Azure Files Premium shares. This SLA is available regardless of protocol (SMB, NFS, and REST) or redundancy type, meaning users can benefit from this SLA immediately, without any configuration changes or extra costs. If the availability drops below the guaranteed 99.99 percent uptime, users are eligible for service credits.
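To put the 99.99 percent figure in perspective, the short calculation below converts an uptime percentage into the downtime it tolerates. It assumes a 30-day month for simplicity; the official SLA terms define the exact measurement period and how credits are calculated.

```python
def allowed_downtime_minutes(sla_percent: float, days: int = 30) -> float:
    """Minutes of downtime that a given SLA tolerates over `days` days."""
    total_minutes = days * 24 * 60
    return total_minutes * (1 - sla_percent / 100)


if __name__ == "__main__":
    # 30 days is an assumed billing period, not a value taken from the SLA.
    print(f"{allowed_downtime_minutes(99.99):.2f} minutes per 30-day month")
```

Under that assumption, 99.99 percent leaves a little over four minutes of downtime per month.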

Microsoft has also rolled out Azure Active Directory support for Azure Files REST API with OAuth authentication in public preview. This update enables share-level read and write access to SMB Azure file shares for users, groups, and managed identities when accessing file share data through the REST API. This means that cloud native and modern applications that use REST APIs can utilize identity-based authentication and authorization to access file shares.

A significant addition to Azure Files is AD Kerberos authentication for Linux clients (SMB), which is now generally available. Azure Files customers can now use identity-based Kerberos authentication for Linux clients over SMB using either on-premises Active Directory Domain Services (AD DS) or Azure Active Directory Domain Services (Azure AD DS).

Also, Azure File Sync, a service that centralizes your organization’s file shares in Azure Files, is now a zone-redundant service. This update means that an outage in a single zone has limited impact, which improves service resiliency and minimizes disruption for customers. To fully benefit from this improvement, Microsoft recommends that users configure their storage accounts to use zone-redundant storage (ZRS) or geo-zone-redundant storage (GZRS) replication.

Another feature that Azure Files has made generally available is Nconnect for NFS Azure file shares. Nconnect is a client-side Linux mount option that increases performance at scale by allowing you to use more TCP connections between the Linux client and the Azure Premium Files service for NFSv4.1. With nconnect, users can increase performance at scale using fewer client machines, ultimately reducing the total cost of ownership.

Azure Blob Storage: More Flexibility and Control

Azure Blob Storage has also seen significant updates in 2023, with one of the highlights being the public preview of dynamic blob containers. This feature offers customers the flexibility to customize container names in Blob storage. This may seem like a small change, but it’s an important one as it provides enhanced organization and alignment with various customer scenarios and preferences. By partitioning their data into different blob containers based on data characteristics, users can streamline their data management processes.

Azure Storage – More Powerful than Ever

The 2023 updates to Azure Storage have further solidified its position as a leading cloud storage solution. With a focus on security, performance, flexibility, and control, these updates represent a significant step forward in how businesses can leverage Azure Storage to meet their unique needs.

The disabling of anonymous access and cross-tenant replication by default is a clear sign of Azure’s commitment to security and data protection. Meanwhile, the updates to Azure Files, including the introduction of a 99.99 percent SLA, AD Kerberos authentication for Linux clients, Azure Active Directory support for Azure Files REST API with OAuth authentication, and the rollout of Azure File Sync as a zone-redundant service, illustrate Microsoft’s dedication to improving user experience and performance.

The introduction of dynamic blob containers in Azure Blob Storage is another example of how Azure is continually evolving to meet customer needs and preferences. By allowing users to customize their container names, Azure has given them more control over their data organization and management.

Overall, the updates to Azure Storage in 2023 are a testament to Microsoft’s commitment to continually enhance its cloud storage offerings. They show that Azure is not just responding to the changing needs of businesses and the broader tech landscape, but also proactively shaping the future of cloud storage. As we continue to navigate 2023, it’s exciting to see what further innovations Azure Storage will bring.

by Mark | May 25, 2023 | Azure, Azure Files, Cloud Storage, Cloud Storage Manager, Storage Accounts

Your Key to Fortifying Data Storage and Accessibility in 2023

In the ever-evolving landscape of cloud computing, data redundancy is no longer just an option but a must-have feature for any business looking to fortify its data storage and accessibility. One of the most recent additions to the world of data redundancy is Azure Files’ Geo-Redundancy feature, a 2023 release that’s set to take the world of cloud storage by storm.

What is Azure Files Geo-Redundancy?

To understand Azure Files Geo-Redundancy, let’s first delve into the basics. Azure Files is a managed file share service provided by Microsoft Azure, offering secure and highly available network file shares accessible via the Server Message Block (SMB) protocol. Geo-Redundancy, on the other hand, refers to the replication of data across different geographical regions for the purpose of data protection and disaster recovery.

Azure Files Geo-Redundancy allows for multiple copies of your storage account data to be maintained, ensuring high durability and availability. If your primary region becomes unavailable for any reason, an account failover can be initiated to the secondary region, allowing for seamless business continuity.

GRS and GZRS: Enhancing Your Data Redundancy

Azure Files Geo-Redundancy offers two types of storage options, each with its unique advantages. Geo-Redundant Storage (GRS) makes three synchronous copies of your data within a single physical location in the primary region, and then makes an asynchronous copy to a single physical location in the secondary region. On the other hand, Geo-Zone-Redundant Storage (GZRS) copies your data synchronously across three Azure availability zones in the primary region before making an asynchronous copy to a physical location in the secondary region.

One important distinction to note is that Azure Files does not support read-access geo-redundant storage (RA-GRS) or read-access geo-zone-redundant storage (RA-GZRS). Consequently, the file shares won’t be accessible in the secondary region unless a failover occurs.

Boosting Performance and Capacity with Large File Shares

Another standout feature of Azure Files Geo-Redundancy is its ability to support large file shares. When enabled in conjunction with GRS and GZRS, the capacity per share can increase up to 100 TiB – a whopping 20 times increase from the previous limit of 5 TiB. Additionally, maximum IOPS per share can reach up to 20,000 IOPS, and the maximum throughput per share can reach up to 300 MiB/s. These enhancements significantly improve the performance of your file shares, making them more suitable for data-intensive applications and workloads.

Where is Azure Files Geo-Redundancy Available?

As of 2023, Azure Files Geo-Redundancy for large file shares is available in a wide range of regions, including multiple locations in Australia, China, France, Germany, Japan, Korea, South Africa, Sweden, the United Arab Emirates, the United Kingdom, and the United States. This extensive coverage provides businesses with the flexibility to choose the most appropriate locations for their data storage based on their specific needs and compliance requirements.

Getting Started with Azure Files Geo-Redundancy

Ready to fortify your data storage with Azure Files Geo-Redundancy? The registration process is simple and can be done via the Azure portal or PowerShell. Once you’re registered, you can easily enable geo-redundancy and large file shares for new and existing standard SMB file shares.

The Snapshot and Sync Mechanism

To ensure consistency of file shares when a failover occurs, Azure creates a system snapshot in the primary region every 15 minutes, which is then replicated to the secondary region. The Last Sync Time (LST) property on the storage account indicates the last time data from the primary region was successfully written to the secondary region. However, due to potential geo-lag or other issues, the latest system snapshot in the secondary region might be older than 15 minutes. It’s also important to note that the Last Sync Time isn’t updated if no changes have been made on the storage account, and its calculation can time out if the number of file shares exceeds 100 per storage account.
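One way to reason about the Last Sync Time is as a rough indicator of how much recent data a failover could lose. The sketch below assumes you have already read the Last Sync Time from the storage account by some means; the function names and the sample timestamps are made up for illustration.

```python
from datetime import datetime, timedelta, timezone

SNAPSHOT_INTERVAL = timedelta(minutes=15)  # interval stated in the text above


def replication_lag(last_sync_time: datetime, now: datetime) -> timedelta:
    """Time elapsed since data was last confirmed written to the secondary."""
    return now - last_sync_time


def exceeds_snapshot_interval(last_sync_time: datetime, now: datetime) -> bool:
    """True when the lag is longer than one snapshot interval."""
    return replication_lag(last_sync_time, now) > SNAPSHOT_INTERVAL


if __name__ == "__main__":
    now = datetime(2023, 5, 25, 12, 0, tzinfo=timezone.utc)
    lst = datetime(2023, 5, 25, 11, 40, tzinfo=timezone.utc)  # made-up value
    print(replication_lag(lst, now), exceeds_snapshot_interval(lst, now))
```

Because the Last Sync Time is not updated when nothing changes on the account, a large lag on an idle account does not necessarily mean replication has fallen behind.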

Considerations for Failover

When planning for a failover, there are a few key considerations to keep in mind. Firstly, a failover will be blocked if a system snapshot doesn’t exist in the secondary region. Secondly, file handles and leases aren’t retained on failover, requiring clients to unmount and remount the file shares. Lastly, the file share quota might change after failover as it’s based on the quota that was configured when the system snapshot was taken in the primary region.

Practical Use Cases

Azure Files Geo-Redundancy offers myriad benefits that apply to various business scenarios. For organizations dealing with large datasets, the enhanced capacity and performance limits with large file shares can significantly improve their data management capabilities. Companies operating in multiple geographical locations can also benefit from the wide regional availability of the service, allowing them to maintain data proximity and potentially meet certain compliance and regulatory requirements.

Azure Files Geo-Redundancy is a promising new addition to the world of cloud storage, providing businesses with an effective tool to enhance their data redundancy and resilience. With its robust features and capabilities, it’s set to pave the way for more secure, reliable, and efficient data storage in the cloud.

So, whether you’re a small business looking to safeguard your data or a large enterprise aiming to optimize your data infrastructure, Azure Files Geo-Redundancy is a feature worth exploring. Its potential to enhance data storage, accessibility, and redundancy makes it a game-changing solution in the ever-evolving landscape of cloud computing.

Conclusion

Azure Files’ new geo-redundancy feature further enhances the utility of Cloud Storage Manager, a tool that can help users manage their Azure file shares efficiently and cost-effectively. As a fully managed cloud-native file sharing service, Azure Files is designed to be always on and accessible via the standard Server Message Block (SMB) protocol. However, native file share management is an area where it lacks. This is where Cloud Storage Manager shines, providing the necessary tools and interfaces to manage your Azure Files storage with ease. Thus, with the addition of geo-redundancy to Azure Files, Cloud Storage Manager becomes an even more invaluable tool in managing the increased complexity and unlocking the potential cost savings that come with larger, geo-redundant file shares.

In the digital era, data is a business’s most valuable asset. The ability to protect and access that data, especially during unexpected events, is critical. This is where Azure Files Geo-Redundancy shines, offering businesses a robust and flexible solution to secure their data and ensure its availability across different geographical regions. As we move forward, we can only expect Azure Files Geo-Redundancy to become an even more integral part of businesses’ data management strategies, setting the standard for high availability, durability, and security in cloud storage.

by Mark | May 22, 2023 | Azure, Azure Blobs, Cloud Storage, Cloud Storage Manager, Storage Accounts

Introduction to Azure Blob Storage

What is Azure Blob Storage?

Azure Blob Storage is a scalable, cost-effective, and durable cloud storage solution provided by Microsoft Azure. Serving as the backbone for many Azure services, it enables businesses to store massive amounts of unstructured data, such as documents, images, backup data, and log files. Azure Blob Storage can handle all your static data that’s stored and read but not changed frequently, making it an indispensable part of any cloud data management strategy.

Components of Azure Blob Storage

In Azure Blob Storage, data resides in storage accounts. These accounts serve as top-level organizational structures that provide a unique namespace for your data. Within storage accounts, we have containers, which function similarly to directories in a file system, holding blobs – the fundamental data entities. Understanding these core components of Azure Blob Storage is crucial to effectively managing and organizing data.

Azure Blob Storage Service Types

Azure Blob Storage offers different blob types to cater to varying business needs. The three types are block blobs for storing text or binary data, append blobs for append operations (ideal for logging scenarios), and page blobs for random read/write operations (for example, virtual machine disks).

The Imperative of Data Security in Azure Blob Storage

Common Scenarios for Data Deletion

Unintentional data deletion in Azure Blob Storage can occur due to various reasons. These range from user errors, like accidental deletion, to policy-based deletions or during data migration processes. Managed Disks, a feature of Azure, can be susceptible to these issues as well. While Azure does provide mechanisms to secure your blob storage, having an extra layer of security like soft delete is invaluable.

Consequences of Unintended Data Loss

Data loss, particularly of critical information, can result in dire consequences for businesses. It could lead to operational disruptions, financial losses, and even regulatory non-compliance, given that certain industries mandate strict data retention policies. This underlines the importance of data loss prevention strategies and backup solutions to safeguard your valuable data stored in Azure Blob Storage.

The Necessity of Robust Data Protection Strategies

Given the potential fallout of unintended data deletion, businesses need to prioritize robust data protection strategies. Features like Azure Storage Service Encryption for data at rest and advanced threat protection can help protect data. One of the most important features that serve as a safety net for data loss due to deletion is Azure Blob Storage Soft Delete.

Azure Blob Storage Soft Delete: A Solution to Unintended Data Deletion

Soft Delete in Azure Blob Storage: An Overview

Soft delete in Azure Blob Storage acts as a recoverable state for blobs. When turned on, it allows blobs or blob versions that have been deleted to be restored, thereby preventing data loss from accidental or unwarranted deletions.

The Working Mechanism of Soft Delete

Soft delete works by maintaining the deleted data in the system for a specified retention period. During this period, the deleted data can be read or recovered, providing a safety net for businesses against data loss. After the retention period, the data is permanently deleted.
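The retention window can be reasoned about with simple date arithmetic. The sketch below is a local calculation only and does not call Azure; the function names and the sample dates are illustrative, and the retention period is whatever you configured when enabling soft delete.

```python
from datetime import datetime, timedelta, timezone


def permanent_deletion_time(deleted_at: datetime, retention_days: int) -> datetime:
    """Moment after which a soft-deleted blob can no longer be restored."""
    return deleted_at + timedelta(days=retention_days)


def is_recoverable(deleted_at: datetime, retention_days: int, now: datetime) -> bool:
    """True while the blob is still inside its retention period."""
    return now < permanent_deletion_time(deleted_at, retention_days)


if __name__ == "__main__":
    deleted = datetime(2023, 5, 1, 9, 0, tzinfo=timezone.utc)  # made-up value
    today = datetime(2023, 5, 10, 9, 0, tzinfo=timezone.utc)
    print(is_recoverable(deleted, retention_days=14, now=today))
```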

Noteworthy Benefits of Soft Delete

Soft delete offers several benefits. Not only does it protect against accidental data loss, but it also aids in maintaining regulatory compliance, particularly in industries that require strict data retention policies. Additionally, with soft delete, businesses can avoid the time and effort that would otherwise be required to recover data from backups.

Activating Soft Delete in Azure Blob Storage

A Stepwise Guide to Enable Soft Delete

Enabling Soft Delete is a simple process involving a few steps. However, it requires careful consideration of the data retention period, which will vary depending on business requirements and potential regulatory obligations.

Important Considerations When Activating Soft Delete

When activating soft delete, businesses should be aware of the increased costs associated with retaining deleted data. Therefore, careful planning of the retention period is vital to balance between data protection and cost efficiency.

How to Retrieve Data Using Azure Blob Storage Soft Delete

The Process of Data Retrieval with Soft Delete

Data retrieval with soft delete involves restoring the deleted blobs or blob versions during the retention period. While the process is straightforward, it does require careful attention to avoid overwriting existing data.

Prerequisites

Before you proceed, ensure that you’ve already enabled Soft Delete on your Azure Blob Storage. If you haven’t done this yet, you can follow the guide here.

Step-by-Step Guide

Step 1: Log into the Azure Portal

To start with, open your web browser and go to the Azure Portal. Enter your credentials to log in.

Step 2: Navigate to your storage account

From the left-hand menu, select “Storage accounts.” This will show you a list of all your storage accounts. Choose the storage account where the deleted blob was located.

Step 3: Open the Blob service

In your storage account window, find and click on “Blob service” under the “Services” section. This will open a list of all your Blob Containers.

Step 4: Locate your blob container

Search for the blob container where your deleted data was stored. Once found, click on it to open.

Step 5: Change the view to show deleted blobs

By default, deleted blobs are hidden from view. To show them, look for the “Show deleted blobs” toggle at the top of the page and turn it on.

Step 6: Find your deleted blob

Now that deleted blobs are visible, scroll through the list or use the search function to locate your deleted blob.

Step 7: Undelete the blob

Once you’ve found your deleted blob, click on the three dots beside it to open a context menu. From there, select “Undelete.”

Now, your deleted blob is restored, and you can access it like before. It’s worth noting that the blob will be restored with the same tier, metadata, and access level it had before deletion.
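The same restore can be scripted. In the Blob Storage REST API, the Undelete Blob operation is a PUT request on the blob URL with `comp=undelete` in the query string. The sketch below only builds the request and does not send it; it assumes you authorize with a SAS token that permits the operation, and the account, container, and blob names are placeholders.

```python
from urllib.parse import quote
from urllib.request import Request


def build_undelete_request(account: str, container: str, blob: str,
                           sas_token: str) -> Request:
    """Build (but do not send) an Undelete Blob request."""
    path = f"{quote(container)}/{quote(blob)}"
    url = (f"https://{account}.blob.core.windows.net/{path}"
           f"?comp=undelete&{sas_token.lstrip('?')}")
    # Undelete Blob is a PUT with an empty request body.
    return Request(url, data=b"", method="PUT", headers={"Content-Length": "0"})


if __name__ == "__main__":
    # "sv=placeholder" stands in for a real SAS token.
    req = build_undelete_request("myaccount", "mycontainer", "report.csv",
                                 "sv=placeholder")
    print(req.get_method(), req.full_url)
```

With the `azure-storage-blob` Python package, the equivalent call is `BlobClient.undelete_blob()`.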

Conclusion

Retrieving data using Azure Blob Storage Soft Delete is a straightforward process. With just a few clicks, you can restore deleted blobs and protect your business from data loss. It’s essential to have Soft Delete enabled to use this feature. You might want to check Cloud Storage Manager as a tool for managing your Azure storage. It can provide insights into your Azure blob and file storage consumption, generate storage usage reports, and help optimize costs.

Please note that these steps might vary slightly depending on the updates or changes made to Azure after the time of writing this guide (as of May 2023). For the most up-to-date instructions, always refer to the official Microsoft Azure documentation.

Potential Limitations and Considerations

While soft delete is an excellent feature, it is not a substitute for a comprehensive backup strategy. Businesses should also implement robust backup and restore strategies to ensure they can recover from significant data loss scenarios.

Enhancing Data Protection with Cloud Storage Manager

An Introduction to Cloud Storage Manager

Cloud Storage Manager, a powerful solution for managing Azure Blob Storage, can help businesses effectively manage their data, optimize costs, and enhance security.

The Role of Cloud Storage Manager in Enhancing Azure Blob Storage Soft Delete

By providing unique insights and reporting capabilities, Cloud Storage Manager can help businesses optimize the use of Azure Blob Storage Soft Delete, ensuring data protection while minimizing costs.

The Unique Insights and Reporting Capabilities of Cloud Storage Manager

The unique insights and reporting capabilities of Cloud Storage Manager, such as usage trends and cost analysis, can provide businesses with valuable information to make informed decisions about their data management strategies.

Wrapping Up

Azure Blob Storage, with its features like Soft Delete, offers a robust solution for businesses to prevent unintended data loss. Coupled with effective management tools like Cloud Storage Manager, businesses can ensure optimal data protection in Azure Blob Storage.

Frequently Asked Questions

Q1: What is Azure Blob Storage Soft Delete? Azure Blob Storage Soft Delete is a feature that, when enabled, allows you to recover blobs or blob versions that have been deleted. This serves as a crucial safety net against data loss due to accidental or malicious deletions.

Q2: How does Soft Delete work in Azure Blob Storage? Soft delete works by keeping the deleted data in the system for a specified retention period. During this period, the deleted data can be read or recovered. However, once the retention period is over, the data is permanently deleted.

Q3: How can I enable Soft Delete in Azure Blob Storage? Enabling Soft Delete is straightforward, but it requires careful consideration of the data retention period. This period will depend on your business requirements and potential regulatory obligations.

Q4: Can I retrieve data once it’s been permanently deleted? No, once the retention period is over and the data has been permanently deleted, it can no longer be retrieved. This highlights the importance of carefully setting your retention period when enabling Soft Delete.

Q5: What role does Cloud Storage Manager play in managing Azure Blob Storage? Cloud Storage Manager is a powerful tool for managing Azure Blob Storage. It provides unique insights into your data, offers usage trend reports, and helps optimize costs. Additionally, it can help businesses effectively utilize Azure Blob Storage Soft Delete, ensuring both data protection and cost efficiency.

In conclusion, Azure Blob Storage Soft Delete is an essential feature for any business aiming to protect its data from unintended deletion. Leveraging it with powerful tools like Cloud Storage Manager can significantly enhance data protection and cost-efficiency in Azure Blob Storage. Be sure to explore the various features of Azure Blob Storage and how they can help secure and manage your data.

Remember, successful data management requires a comprehensive understanding of available tools and features, strategic planning, and constant vigilance.

by Mark | May 19, 2023 | Azure Blobs, Cloud Storage, Storage Accounts

What is Azure Blob Storage REST API?

Introduction to Azure Blob Storage

Azure Blob Storage is a cloud-based storage solution provided by Microsoft as part of its Azure platform. It enables users to store and manage unstructured data such as text, images, videos, and binary data in the cloud. This makes it a highly scalable and cost-effective way to store large amounts of data without having to worry about hardware maintenance or infrastructure management.

Azure Blob Storage is highly available and durable, with multiple copies of data stored across different locations within a region or even across regions for disaster recovery purposes. It also supports various access tiers, including hot, cool, and archive tiers with different pricing models depending on the frequency of access.

Overview of REST API

REST (Representational State Transfer) API is an architectural style for building web services that are lightweight, flexible, and scalable. It uses HTTP methods such as GET, POST, PUT, DELETE to interact with resources identified by URIs (Uniform Resource Identifiers).

Azure Blob Storage REST API follows the REST architectural style for accessing blobs stored in Azure storage accounts. This means that you can use HTTP methods like PUT to upload data into the blob container or GET to download data from it.

REST APIs have several benefits over traditional APIs. First, they offer better scalability since they are stateless and have a simple request/response model.

Second, they enable developers to build powerful applications using lightweight clients such as mobile devices or web browsers. RESTful APIs are also language-agnostic, which means that you can use any programming language that can send HTTP requests and read the responses.

Benefits of Using Azure Blob Storage REST API

The benefits offered by Azure Blob Storage REST API include:

- Scalability: The RESTful architecture ensures your application can scale horizontally without needing additional hardware.

- Flexibility: RESTful APIs are flexible and simple to use, which makes it easy to integrate Azure Blob Storage with other applications.

- Cost-effective: With Azure Blob Storage, you only pay for what you use, and the pricing tiers allow for cost optimization based on usage patterns.

- High Availability: Azure Blob Storage provides multiple copies of data stored across different locations within a region or even across regions for disaster recovery purposes.

- Security: The RESTful API provides several security features such as TLS encryption over HTTPS and SAS (Shared Access Signature) tokens to ensure secure access to resources.

Overall, Azure Blob Storage REST API is a powerful tool that enables developers to store, manage and retrieve large amounts of unstructured data in the cloud. Its ease of use, scalability, and flexibility make it an ideal solution for organizations looking to modernize their data storage infrastructure.

Getting Started with Azure Blob Storage REST API

Creating an Azure Storage Account

If you’re new to Azure, the first step in using the Blob Storage REST API is to create a storage account. This can be done through the Azure portal, or programmatically using an Azure SDK. When creating a storage account, you’ll need to choose a unique name and specify the account type (standard or premium).

You’ll also need to choose the replication type, which determines how your data is stored and replicated across multiple locations for redundancy. Once you’ve created your storage account, you can start using it to store data in blob containers.

Obtaining Access Keys for the Storage Account

To access your storage account from code, you’ll need to obtain two access keys – a primary key and a secondary key. These keys are used for authentication when making requests to the Blob Storage REST API.

To obtain these keys, navigate to your storage account in the Azure portal and click on “Access Keys” under “Settings”. From here, you can copy either key and use it in your code.

Understanding the Structure of a Blob Storage URL

In order to interact with blobs in your storage account via REST API calls, you’ll need to understand how URLs are structured. A typical blob URL has four components:

1) The base URL of your storage account (e.g., https://mystorageaccount.blob.core.windows.net)

2) The container name

3) The blob name (optional)

4) Query parameters (optional)

For example: https://mystorageaccount.blob.core.windows.net/mycontainer/myblob?sv=2021-06-01&st=2022-01-01&se=2022-02-01&sr=b&sp=r&sig=<signature>

Here the query parameters carry the shared access signature (SAS) for the blob, which determines the permissions and expiration time for accessing the blob.
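The four components can be assembled and taken apart with Python’s standard `urllib.parse` module. This is a minimal sketch: the helper names `build_blob_url` and `split_blob_url` are hypothetical, and the code assumes the default `blob.core.windows.net` endpoint rather than a custom domain.

```python
from typing import Optional
from urllib.parse import parse_qs, quote, unquote, urlsplit


def build_blob_url(account: str, container: str, blob: Optional[str] = None,
                   query: Optional[str] = None) -> str:
    """Assemble base URL + container + optional blob + optional query."""
    url = f"https://{account}.blob.core.windows.net/{quote(container)}"
    if blob:
        url += "/" + quote(blob)  # "/" inside blob names is kept as-is
    if query:
        url += "?" + query.lstrip("?")
    return url


def split_blob_url(url: str) -> dict:
    """Split a blob URL back into its components."""
    parts = urlsplit(url)
    container, _, blob = parts.path.lstrip("/").partition("/")
    return {
        "account": parts.hostname.split(".")[0],
        "container": unquote(container),
        "blob": unquote(blob) or None,
        "query": {k: v[0] for k, v in parse_qs(parts.query).items()},
    }


if __name__ == "__main__":
    url = build_blob_url("mystorageaccount", "mycontainer", "myblob", "sp=r")
    print(url)
    print(split_blob_url(url))
```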

Using Azure SDKs

While it’s possible to interact with the Blob Storage REST API directly using HTTP calls and XML payloads, it might be easier to use one of the various Azure SDKs available in multiple programming languages. These SDKs abstract away many of the details of making REST API requests and handling authentication. The Python SDK package for Blob Storage is called “azure-storage-blob” and can be installed via pip.
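As a brief illustration of what the SDK hides, the sketch below uploads a small piece of text and reads it back using `azure-storage-blob`. It assumes the package is installed and that you have a connection string for your storage account; the function name `round_trip_text` is hypothetical, and error handling is omitted.

```python
def round_trip_text(connection_string: str, container: str, blob: str,
                    text: str) -> str:
    """Upload `text` to a blob, then download it again and return it."""
    # Imported lazily so this module still loads where the SDK is missing
    # (install with: pip install azure-storage-blob).
    from azure.storage.blob import BlobServiceClient

    service = BlobServiceClient.from_connection_string(connection_string)
    blob_client = service.get_blob_client(container=container, blob=blob)
    # overwrite=True replaces an existing blob with the same name.
    blob_client.upload_blob(text.encode("utf-8"), overwrite=True)
    return blob_client.download_blob().readall().decode("utf-8")
```

Calling `round_trip_text(conn_str, "mycontainer", "myblob", "Hello World")` against an existing container should return the same string; the container itself is assumed to exist already.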

Testing Your Connection

After creating your storage account, obtaining access keys, understanding URL structure, and possibly configuring an SDK, you can test your connection by uploading a file to a container or downloading a blob. It’s important to note that every action against Blob Storage REST API incurs charges – these charges may vary based on storage account type, region etc. So make sure you know what features cost before using them in production!

Uploading and Downloading Data with Azure Blob Storage REST API

Using HTTP PUT method to upload data to a blob container

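Uploading data to an Azure Blob Storage container is simple using the REST API. To upload data, authorize the request against your storage account using either your account key (Shared Key authorization) or a shared access signature (SAS), which can optionally reference a stored access policy.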

Once authorized, you can create a new blob in the container and upload the data using an HTTP PUT request. You should include the Content-Type and Content-Length headers in your request, and the Put Blob operation also requires the x-ms-blob-type header (for example, BlockBlob).

To create a new blob, append the blob name to the container URL. The resulting URL is called the destination URL.

Then, issue an HTTP PUT request that includes the content of your blob in the message body of your request. Authorization headers are omitted here for brevity.

```http
PUT https://myaccount.blob.core.windows.net/mycontainer/myblob
x-ms-blob-type: BlockBlob
Content-Type: text/plain
Content-Length: 11

Hello World
```

This example uploads “Hello World” as plain text to a file named “myblob” in “mycontainer”. If successful, this command returns status code 201 (Created).
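The PUT request above can be sketched with Python’s standard library alone. The example assumes the blob URL already carries a SAS token with write permission, which avoids computing a Shared Key signature; `build_put_blob_request` is a hypothetical helper, and the request is built but not sent.

```python
from urllib.request import Request


def build_put_blob_request(blob_url_with_sas: str, data: bytes,
                           content_type: str = "text/plain") -> Request:
    """Build a Put Blob request for a block blob."""
    headers = {
        "x-ms-blob-type": "BlockBlob",     # required by Put Blob
        "Content-Type": content_type,
        "Content-Length": str(len(data)),  # body size in bytes
    }
    return Request(blob_url_with_sas, data=data, method="PUT", headers=headers)


if __name__ == "__main__":
    # "sv=placeholder" stands in for a real SAS token.
    req = build_put_blob_request(
        "https://myaccount.blob.core.windows.net/mycontainer/myblob?sv=placeholder",
        b"Hello World",
    )
    print(req.get_method(), dict(req.header_items()))
    # To send it: urllib.request.urlopen(req); status 201 indicates success.
```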

Using HTTP GET method to download data from a blob container

Downloading blobs from an Azure Blob Storage container is just as easy as uploading them with REST API. You simply issue an HTTP GET request for any given resource within a container by providing its URL.

To download from Azure Blob Storage using REST API, you must authorize every request made against resources in the storage account, either with a Shared Key signature derived from your account key, a SAS token, or a Microsoft Entra ID token. You can then issue GET requests against URLs pointing to individual blobs. A GET against a container URL with the restype=container and comp=list query parameters does not download the container; it returns a list of the blobs it holds.

```http
GET https://myaccount.blob.core.windows.net/mycontainer/myblob
x-ms-date: Mon, 27 Jul 2009 12:28:53 GMT
x-ms-version: 2009-07-17
```

This example retrieves the content of a blob named “myblob” in “mycontainer”. If successful, the response has status code 200 (OK) and its body contains the content of the blob.

Uploading and downloading data is a critical part of Azure Blob Storage REST API. Using HTTP PUT method to upload data to a blob container and using HTTP GET method to download data from a blob container is simple once you understand the specific headers required for each request.

Managing Containers with Azure Blob Storage REST API

Creating and Deleting Containers in Azure Blob Storage REST API

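Azure Blob Storage REST API allows developers to create and delete containers using HTTP PUT and DELETE methods, respectively. A container is a logical unit of storage in which blobs are stored. To create a new container, developers can send an HTTP PUT request to the URL of the container they want to create, with the restype=container query parameter (for example, https://myaccount.blob.core.windows.net/mycontainer?restype=container).

The name of the container must be unique within the storage account. It must be 3 to 63 characters long, may contain only lowercase letters, numbers, and hyphens, must start and end with a letter or number, and may not contain consecutive hyphens. On successful creation, an HTTP status code of 201 (Created) is returned along with the ETag value for the newly created container.

To delete a container, send an HTTP DELETE request to the same URL, again with restype=container. This removes the container together with every blob in it, so there is no need to delete the blobs first. Unless container soft delete is enabled on the storage account, the deletion cannot be undone.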
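Checking a name locally before sending the request avoids a round trip that is bound to fail. The sketch below encodes the documented naming rules (3 to 63 characters, lowercase letters, digits and hyphens, no leading, trailing, or consecutive hyphens); the function name is made up for this example, and special system containers such as $root or $web are deliberately not covered.

```python
import re

# One or more alphanumeric runs separated by single hyphens.
_CONTAINER_NAME = re.compile(r"[a-z0-9]+(?:-[a-z0-9]+)*")


def is_valid_container_name(name: str) -> bool:
    """Return True if `name` satisfies the container naming rules."""
    return 3 <= len(name) <= 63 and _CONTAINER_NAME.fullmatch(name) is not None


for candidate in ("mycontainer", "My-Container", "a--b", "logs-2023"):
    print(candidate, is_valid_container_name(candidate))
```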

Listing All Containers in Azure Blob Storage REST API

Listing all containers within an Azure storage account is made possible by sending an HTTP GET request to the account URL with the comp=list query parameter (https://myaccount.blob.core.windows.net/?comp=list). The request must be authorized like any other, either through the Authorization header or with a SAS token in the query string. The response is an XML document that describes each container: its name, properties such as the ETag and last-modified time, lease status, and optionally its metadata.

Developers can then use this information to make further changes or obtain more information about each particular container. In addition to listing all containers in an account, developers can narrow the results with the prefix parameter, which returns only containers whose names begin with the given string, and page through large result sets with the maxresults and marker parameters.
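The listing request and its XML response can be handled with the Python standard library alone. In this sketch the helper names and the sample response are illustrative; the sample is trimmed to the elements the parser reads, and a real response carries more properties per container.

```python
import urllib.parse
import xml.etree.ElementTree as ET


def list_containers_url(account, prefix=None, max_results=None):
    """Build the List Containers URL, optionally filtered by name prefix."""
    params = {"comp": "list"}
    if prefix:
        params["prefix"] = prefix
    if max_results:
        params["maxresults"] = str(max_results)
    return f"https://{account}.blob.core.windows.net/?" + urllib.parse.urlencode(params)


def parse_container_names(xml_text):
    """Return (container names, next marker or None) from a listing response."""
    root = ET.fromstring(xml_text)
    names = [container.findtext("Name") for container in root.iter("Container")]
    next_marker = root.findtext("NextMarker") or None
    return names, next_marker


# Trimmed, hand-written sample of a List Containers response.
SAMPLE_RESPONSE = """<EnumerationResults ServiceEndpoint="https://myaccount.blob.core.windows.net/">
  <Containers>
    <Container><Name>images</Name></Container>
    <Container><Name>images-thumbs</Name></Container>
  </Containers>
  <NextMarker />
</EnumerationResults>"""

print(list_containers_url("myaccount", prefix="images", max_results=2))
print(parse_container_names(SAMPLE_RESPONSE))
```

When the next marker is not empty, passing it back as the marker parameter of the following request continues the listing where the previous page stopped.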

Caveats

When creating or deleting containers using Azure Blob Storage REST API, consider data integrity as well as performance, especially when working with large amounts of data across multiple accounts simultaneously. Also remember that Blob Storage does not limit the number of blobs in a container or the number of containers in an account; the practical ceiling is the capacity of the storage account itself. The figure of 5,000 that is often quoted is the maximum number of results a single list operation returns, not a storage limit. A single block blob can consist of up to 50,000 blocks, which with current service versions allows blobs of roughly 190 TiB.

Conclusion

Managing containers efficiently and effectively within Azure Blob Storage REST API is essential for good data management. Creating new containers, deleting old ones and listing all containers efficiently can save time, space and contribute to a better organized system.

Working with Blobs in Azure Blob Storage REST API

Uploading, Downloading, and Deleting Blobs

Blobs are the fundamental entities stored in Azure Blob Storage. They can contain any type of data, such as text, images, videos, or binary files. In order to upload a blob to Azure Blob Storage using REST API, you need to use the HTTP PUT method with the following URL format: https://{accountname}.blob.core.windows.net/{containername}/{blobname}.
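Blob names often contain spaces or other characters that are not safe in a URL, so it helps to build the address with a small helper. This is a minimal sketch; the function name is hypothetical, and it assumes the default public endpoint suffix shown in the URL format above.

```python
from urllib.parse import quote


def blob_url(account: str, container: str, blob_name: str) -> str:
    """Return the URL of a blob, percent-encoding the blob name."""
    # "/" is left unencoded because blob names may use it as a
    # virtual directory separator.
    encoded_name = quote(blob_name, safe="/")
    return f"https://{account}.blob.core.windows.net/{container}/{encoded_name}"


print(blob_url("myaccount", "mycontainer", "photos/cat 1.png"))
```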

When uploading a blob, you can also specify its MIME type through the Content-Type header and attach custom metadata through x-ms-meta-* headers. To download a blob from Azure Blob Storage using REST API, you need to use the HTTP GET method with the same URL format as for uploading.

You then receive a response that contains the content of the blob in its body. If you want to download part of a blob instead of its entire content, you can specify byte ranges in your GET request by setting the Range header.
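A partial download differs from a full one only in the Range header. The sketch below builds such a request without sending it; the helper name is hypothetical and the URL is assumed to already carry whatever authorization (for example a SAS token) the blob requires.

```python
import urllib.request


def build_range_get(blob_url: str, start: int, end: int) -> urllib.request.Request:
    """Build a GET request for bytes start..end (inclusive) of a blob."""
    if start < 0 or end < start:
        raise ValueError("expected 0 <= start <= end")
    headers = {"Range": f"bytes={start}-{end}"}
    return urllib.request.Request(blob_url, headers=headers, method="GET")


# First kilobyte of the blob; a successful ranged read answers with
# status 206 (Partial Content) rather than 200.
request = build_range_get(
    "https://myaccount.blob.core.windows.net/mycontainer/myblob", 0, 1023
)
```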

Deleting blobs from Azure Blob Storage using REST API is done through an HTTP DELETE request that uses the same URL format as for uploading and downloading. Unless blob soft delete or versioning is enabled on the storage account, deleted blobs cannot be recovered.

Copying Blobs within or Across Storage Accounts

Copying blobs within or across storage accounts is another useful feature provided by Azure Blob Storage REST API. The basic idea is that you can replicate blobs across different containers or accounts without manually downloading them first. To copy a blob within the same storage account using REST API, you can use either synchronous or asynchronous copy operations.

A synchronous copy (the Copy Blob From URL operation) returns only once the replica of the source blob has been fully written to the destination, and it is limited to smaller source blobs. An asynchronous copy (the Copy Blob operation) is accepted immediately and carried out by the service in the background; you track its progress through the copy status reported on the destination blob.

Copying a blob across different storage accounts using REST API is a two-step process:

– First obtain a Shared Access Signature (SAS) token for the source blob.

– Then use the token to initiate an asynchronous copy operation from the source blob URL to the destination blob URL.

Note that when copying blobs across storage accounts, you may incur additional network egress charges and higher latency compared to copying within the same storage account.
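The two steps above can be sketched as a single request: a PUT to the destination blob whose x-ms-copy-source header carries the source URL with its SAS token appended. All URLs and tokens below are placeholders, the helper name is hypothetical, and the request is only built, not sent.

```python
import urllib.request


def build_copy_blob_request(dest_url, dest_sas, source_url, source_sas):
    """Build an asynchronous Copy Blob request between two accounts."""
    headers = {
        # The service reads the source through this SAS-authorized URL.
        "x-ms-copy-source": f"{source_url}?{source_sas}",
    }
    # Copy Blob has no request body, hence the empty payload.
    return urllib.request.Request(
        f"{dest_url}?{dest_sas}", data=b"", headers=headers, method="PUT"
    )


request = build_copy_blob_request(
    "https://destaccount.blob.core.windows.net/backup/report.pdf", "sig=DEST_PLACEHOLDER",
    "https://srcaccount.blob.core.windows.net/docs/report.pdf", "sig=SOURCE_PLACEHOLDER",
)
```

The service normally answers an accepted copy with status 202 and reports a copy ID and copy status in the response headers, which you can poll on the destination blob.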

Use Case: Uploading Images with Azure Blob Storage REST API

One common use case for Azure Blob Storage REST API is uploading images from a web application. For example, you might want to allow your users to upload profile pictures or other visual content without having to store them on your own server.

To achieve this, you can create a container in your Azure Blob Storage account that allows anonymous read access to its blobs. This has to be enabled explicitly, since anonymous access is off by default on new storage accounts. When a user uploads an image through your web application, your server can send an authorized PUT request to the blob’s URL inside that container with the appropriate headers and body data.

Once uploaded, you can obtain the public URL of the image by concatenating its container name and blob name with https://{accountname}.blob.core.windows.net/. By using Azure Blob Storage REST API for image uploads, you take image storage off your own servers and improve the scalability and reliability of your web application.

Best Practices for Working with Blobs in Azure Blob Storage REST API

When working with blobs in Azure Blob Storage using REST API, there are several best practices that you should follow:

– Always use HTTPS instead of HTTP to ensure secure data transmission.

– Use SAS tokens instead of Access Keys whenever possible to minimize security risks.

– Avoid hardcoding connection strings or keys in your code; instead use environment variables or other secure configuration methods.

– Use asynchronous copy operations when copying large blobs or across different storage accounts.

– Consider using CDN (Content Delivery Network) integration for serving frequently accessed blobs faster and closer to end-users.

By following these best practices, you can optimize performance and security while minimizing costs when working with blobs in Azure Blob Storage using REST API.

Securing Access to Azure Blob Storage REST API

Implementing Shared Access Signature (SAS) for Secure Access to Blobs

One of the most important aspects of using the Azure Blob Storage REST API is ensuring that your data is secure. The implementation of Shared Access Signature (SAS) allows you to create a secure and time-limited access to a specific blob or container in your storage account.

To implement SAS, you need to generate a token that includes an expiration time, permissions granted, and cryptographic signature. This token can then be appended as a query string parameter to the URL used for accessing the blob or container.
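The cryptographic signature is an HMAC-SHA256 over a "string to sign", keyed with the Base64-decoded account key and then Base64-encoded. The sketch below shows only that primitive and the step of attaching a finished token to a URL. The exact layout of the string to sign depends on the service version and is not reproduced here, so both the string and the key in the example are placeholders.

```python
import base64
import hashlib
import hmac


def sign(account_key_b64: str, string_to_sign: str) -> str:
    """Return Base64(HMAC-SHA256(key, UTF-8(string_to_sign)))."""
    key = base64.b64decode(account_key_b64)
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


def append_sas(url: str, sas_token: str) -> str:
    """Attach a SAS token to a blob or container URL as query parameters."""
    separator = "&" if "?" in url else "?"
    return f"{url}{separator}{sas_token.lstrip('?')}"


# Placeholder key and string, for illustration only.
demo_key = base64.b64encode(b"0" * 32).decode("ascii")
signature = sign(demo_key, "placeholder-string-to-sign")
print(append_sas("https://myaccount.blob.core.windows.net/mycontainer/myblob",
                 "sp=r&sig=PLACEHOLDER"))
```

In practice an SDK or the Azure portal usually generates the token, which avoids mistakes in assembling the string to sign by hand.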

By using SAS, you are able to grant temporary access with limited permissions, minimizing the risk of unauthorized access or misuse of your data. It is important to note that SAS tokens should always be kept private and only shared with trusted parties.

Configuring CORS Rules for Cross-origin Requests

Another important aspect of securing access to Azure Blob Storage REST API is configuring Cross-Origin Resource Sharing (CORS) rules. CORS lets web applications running on a different domain than your storage account call it from the browser, but only for the origins, HTTP methods, and headers you explicitly allow. Without a matching CORS rule, web browsers block such cross-origin requests. CORS is not an authorization mechanism, so every request still needs valid credentials or a SAS token.

Rules that are too strict break legitimate web clients, while rules that are too permissive (for example, allowing every origin) let any website call your storage account from its visitors’ browsers. To configure CORS rules for cross-origin requests, you must specify which domains are allowed and which HTTP methods are supported.

This can be done through Azure Portal or programmatically through REST API calls. By configuring CORS rules correctly, you enable cross-domain requests while maintaining control over which domains can access your data and what type of requests they can make.
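When set programmatically, CORS rules travel as an XML document in the body of a Set Blob Service Properties request (a PUT to the account URL with restype=service and comp=properties). The sketch below builds that document for a single rule; the function name and the example origin are assumptions made for illustration, and allowing all headers with "*" is a simplification you may want to tighten.

```python
import xml.etree.ElementTree as ET


def cors_rule_xml(origins, methods, max_age_seconds=3600):
    """Return a service-properties XML body containing one CORS rule."""
    props = ET.Element("StorageServiceProperties")
    rule = ET.SubElement(ET.SubElement(props, "Cors"), "CorsRule")
    ET.SubElement(rule, "AllowedOrigins").text = ",".join(origins)
    ET.SubElement(rule, "AllowedMethods").text = ",".join(methods)
    ET.SubElement(rule, "AllowedHeaders").text = "*"
    ET.SubElement(rule, "ExposedHeaders").text = "*"
    # How long the browser may cache the preflight response.
    ET.SubElement(rule, "MaxAgeInSeconds").text = str(max_age_seconds)
    return ET.tostring(props, encoding="unicode")


print(cors_rule_xml(["https://www.example.com"], ["GET", "PUT"]))
```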

Securing access within any system is an essential factor when dealing with sensitive data storage such as blobs in Azure. By implementing Shared Access Signatures (SAS), you limit access to your blobs based on permissions and time limitations, making sure that only the right people have the necessary permissions.

On the other hand, configuring CORS rules for cross-origin requests ensures that web browsers don’t block requests from the websites you have chosen to allow. With SAS and CORS configured carefully, your data stays protected while remaining accessible to those who are permitted to use it.

Monitoring and Logging in Azure Blob Storage REST API

Enabling logging for diagnostic purposes

When working with Azure Blob Storage, it’s essential to have a way to monitor the activity happening within your storage account. Enabling logging can help you diagnose errors, track usage, and understand how your application is interacting with blob storage.

To enable logging, create a diagnostic setting for the blob service in Azure Monitor and choose where the logs should go: a Log Analytics workspace, a storage account, or an event hub (from which third-party log analytics tools can consume them). Once enabled, logs are created for each storage service API operation that occurs in the account.

These logs contain information such as the timestamp of the operation, the user who initiated it, and any error messages associated with it. You can then use these logs to troubleshoot issues or identify trends in usage.

Monitoring performance metrics using metrics APIs

In addition to logging, Azure Blob Storage also provides metrics APIs that allow you to monitor performance and usage over time. These APIs provide data on various aspects of your storage account’s performance, such as transaction counts, ingress/egress volume, latency, and availability. Azure Monitor collects these platform metrics for storage accounts automatically, so no extra configuration is needed before you query them.

You can retrieve metric data using REST API calls or by accessing it through Azure Monitor. This data can be used to create custom dashboards or alerts based on specific thresholds.

Conclusion

Monitoring and logging are crucial aspects of managing Azure Blob Storage accounts through REST API calls. By enabling logging and using the metrics available through Azure Monitor, developers gain insight into how their applications use blob storage, which is critical for identifying the bottlenecks that cause problems for users.

Used together with the other tools covered in this article, such as SAS for access control, monitoring and logging help ensure that your Azure Blob Storage accounts perform well and deliver reliable, scalable storage. So whether you’re an application developer, a cloud architect, or anyone working with blob storage accounts in Azure, implementing monitoring and logging should be a top priority for managing these services effectively.

by Mark | May 17, 2023 | Azure, Azure Blobs, Cloud Storage, Storage Accounts

Azure Service Principals – The Key to Managing Your Azure Resources

Azure Service Principals are a crucial aspect of managing your Azure resources. They provide a secure and efficient way to manage your resources, without the need for human intervention.

In this article, we will explore what Azure Service Principals are, how they work, and why they are important. As you may already know, Azure is a cloud computing platform that allows you to host, deploy and manage your applications and services.

With its vast range of features and capabilities, it can be challenging to manage all of your resources effectively. This is where Azure Service Principals come in.

Azure Service Principals allow you to create and assign roles to an identity that can be used by applications or services that need access to specific resources in your Azure environment. This provides a secure way for these applications or services to access the resources they need while keeping them separate from end-users.

The Importance of Managing Your Azure Resources

Managing your Azure resources can be challenging as there are so many things to keep track of. You need to ensure that everything runs smoothly without any downtime or glitches affecting your end-users’ experience. And you need to protect against cyber threats such as unauthorized access or data breaches.

One way you can do this is by using role-based access control (RBAC). RBAC helps you specify what actions users or groups can perform on specific resources within the scope of their assigned roles.

By using RBAC with Service Principals, you can ensure that only authorized requests have access to critical data. Another advantage of using an Azure Service Principal is scalability – it allows multiple applications or services with varying degrees of access privileges to interact with the same resource securely and efficiently without conflicting with each other’s permissions.

How An Azure Service Principal Works

An Azure Service Principal is essentially an identity created within your Azure Active Directory (now called Microsoft Entra ID). It is similar to a user account but is used specifically for applications or services that need to access specific resources within the Azure environment.

So, how does it work? Let’s say you have an application that needs to access a database located in your Azure environment.

You create an Azure Service Principal and assign it the necessary roles and permissions required to access this database. The application can use this service principal’s credentials when connecting to the database securely.

These credentials can be configured with different authentication methods such as certificates or passwords, depending on your security requirements. Additionally, you can customize your service principal further by configuring its expiration date, adding owners or contributors, and applying additional policies and permissions.

Why Use An Azure Service Principal?

Using an Azure Service Principal provides several benefits for managing your Azure resources effectively. For one, it allows you to separate identity from applications or services that require access to resources – reducing risks of unauthorized access by end-users. Service principals also enable efficient delegation of permissions across different roles and allow for centralised management of resource access privileges.

By creating multiple service principals with varying degrees of permissions, you can grant project teams granular control over their own applications and resources without compromising overall data security. In summary, using an Azure Service Principal as part of RBAC supports secure management of the resources in your Azure environment, helping to prevent cyber-attacks while improving operational efficiency through streamlined resource management.

What are Azure Service Principals?

Azure Service Principals are an important part of managing Azure resources. They are essentially security objects that allow for non-human, automated tasks to be performed in Azure. In simpler terms, they provide a way for programs and applications to authenticate themselves when interacting with Azure resources.

When it comes to identities in Azure, there are three main types: user accounts, service accounts, and service principals. User accounts are what you or I use to login and interact with the portal.

Service accounts are used for applications that need access to resources but don’t require permissions beyond what is needed to perform their specific task. Service principals, on the other hand, can be thought of as a more specific type of service account – they represent an application or service rather than a user.

Service principals have a unique identifier called an Object ID which can be used to refer to them when assigning roles or permissions within Azure. They can be assigned roles just like users or groups can be – but because they aren’t tied to any one person’s account, they provide a more secure way for programs and applications to interact with resources.

Comparison to other types of identities in Azure

So how do service principals compare to user and service accounts? User accounts have their own set of login credentials and permissions associated with them; they’re meant for interacting with Azure manually through the portal or command line tools like PowerShell.

Meanwhile, service accounts are similar but intended for use by non-human entities such as application pools in IIS. Service principals bridge the gap between these two types by allowing programs and applications to authenticate themselves using the OAuth 2.0 protocol instead of having someone enter credentials each time they need access.

This makes them ideal for scenarios where automation is required, such as CI/CD pipelines where code needs frequent access to resources without human intervention. Overall, Azure Service Principals provide an essential way for non-human entities to interact with Azure resources without compromising on security.

Creating an Azure Service Principal

Azure Service Principal is a type of identity that allows you to manage Azure resources programmatically. It is widely used in automation scripts and applications because it provides secure and fine-grained access to resources. Creating an Azure Service Principal is a straightforward process that involves a few steps.

Step-by-step guide on how to create an Azure Service Principal

There are different ways to create an Azure Service Principal, but the easiest and most common method is registering an application in the Azure portal, which creates the service principal for you. Here are the steps:

1. Sign in to the Azure portal.

2. Navigate to “Azure Active Directory” and select “App registrations” from the menu.

3. Click on “New registration”.

4. Enter a name for the application, choose which accounts may use it, and click “Register”.

5. On the overview page, note the “Application (client) ID” and the “Directory (tenant) ID”; your code will need both.

6. Open “Certificates & secrets” and add a client secret or upload a certificate, as described in the next section.

Explanation of different authentication methods available

When creating an Azure Service Principal, you have two options for authentication: password-based authentication and certificate-based authentication. Password-based authentication involves creating a client secret which is essentially a password that you use with your application or automation script to authenticate with the service principal. This method is simple and easy but it requires managing passwords which can be challenging at scale.

Certificate-based authentication involves creating a certificate (self-signed or issued by a certificate authority) which is used by your application or automation script as a credential for authenticating with the service principal. This method offers higher security than password-based authentication because the private key never has to be sent over the network, and a certificate can be revoked or replaced if needed.

In general, certificate-based authentication is recommended for applications that run in secure environments because it provides a higher level of protection. However, password-based authentication is still commonly used in many scenarios because it is easier to manage and implement.

Creating an Azure Service Principal is an easy and important step for managing Azure resources programmatically. By following the above steps and choosing the appropriate authentication method, you can create a secure and scalable identity that provides fine-grained access to your resources.

Assigning Roles to an Azure Service Principal

Azure Service Principals are a powerful tool for managing Azure resources, allowing you to automate the management of your resources without having to manually configure each resource individually. To properly manage your resources with a Service Principal, you need to assign it the appropriate roles. In this section, we’ll take a look at how roles work in Azure and how you can assign them to an Azure Service Principal.

How Roles Work in Azure

In Azure, roles are used to determine what actions users (or identities like service principals) can perform on specific resources. There are several built-in roles that come with different levels of access, ranging from read-only access to full control over the resource.

You can also create custom roles if none of the built-in ones meet your needs. When assigning a role to an identity such as a Service Principal, you will need to specify the scope at which the role should be assigned.

This scope determines which resources the identity has permission for. For example, if you assign a role at the subscription level, then that identity will have that role for all resources within that subscription.
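Scopes are written as resource ID paths, and a role assigned at one scope applies to everything whose ID sits beneath that path. The helpers below are a hypothetical illustration of that inheritance rule, not an Azure API; the subscription ID and resource names are placeholders.

```python
def resource_group_scope(subscription_id: str, resource_group: str) -> str:
    """Return the scope string for a resource group."""
    return f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"


def scope_covers(assigned_scope: str, resource_id: str) -> bool:
    """Return True if a role assigned at `assigned_scope` applies to `resource_id`."""
    # Resource IDs are compared case-insensitively.
    assigned = assigned_scope.rstrip("/").lower()
    resource = resource_id.rstrip("/").lower()
    # Require a path boundary so "my-rg" does not match "my-rg-other".
    return resource == assigned or resource.startswith(assigned + "/")


rg_scope = resource_group_scope("00000000-0000-0000-0000-000000000000", "my-rg")
account_id = rg_scope + "/providers/Microsoft.Storage/storageAccounts/myaccount"
print(scope_covers(rg_scope, account_id))
```

Assigning roles at the narrowest scope that still covers the resources an identity needs keeps the impact of a leaked credential small.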

Why Roles Are Important for Managing Resources

Roles are important for managing resources because they provide a way to control who has access and what level of access they have. By assigning appropriate roles to identities like service principals, you can ensure that only authorized individuals or applications have access and can perform actions on those resources.

Without proper role-based access control (RBAC), it becomes difficult and time-consuming to manage permissions for multiple users and identities across multiple resources. Additionally, RBAC helps with compliance requirements by ensuring only authorized personnel have access.

Guide on How to Assign Roles

Assigning roles is relatively simple once you understand how they work and why they are important. To assign a role:

1. Navigate to the Azure portal and open the resource group or resource you want to assign a role to.

2. Click on the “Access control (IAM)” tab on the left-hand side.

3. Click on the “+ Add” button and select “Add role assignment.”

4. Select the role you want to assign from the list of built-in roles or create a custom one.

5. Select your identity, in this case, your Azure Service Principal, and click “Save.”

That’s it! Your Azure Service Principal now has the appropriate role assigned to it for that resource.

Assigning roles is crucial for managing resources in Azure effectively and securely. Understanding how roles work, why they are important, and how to assign them is key to properly using Azure Service Principals for automation and management of resources.

Using an Azure Service Principal with APIs and Applications

The Role of Azure Service Principals in API and Application Authentication

Azure Service Principals provide a secure way to authenticate applications and APIs with Azure resources. By leveraging the OAuth 2.0 protocol for authentication, applications can be authorized to access specific resources in Azure without the need for user credentials or manual intervention.

To use an Azure Service Principal for authentication, you’ll need to create it first. Once created, you can obtain the necessary credentials (client ID, secret, and tenant ID) and use them in your application code to securely access your resources.

Scenarios where Using an Azure Service Principal is Useful

Using an Azure Service Principal with APIs and applications is ideal when you have a multi-tier architecture that requires secure communication between different tiers. For example, consider a web application that needs to communicate with a backend API hosted on Azure Functions or App Service. In this scenario, using an Azure Service Principal allows the web application to securely authenticate with the backend API without exposing any user credentials or relying on manual authentication.

Another common scenario where using an Azure Service Principal is useful is when you’re building automation scripts that need access to different resources in your subscription. By creating a service principal with specific role assignments (e.g., Contributor), your script can automatically access those resources without requiring any human intervention.

Implementing Authentication Using an Azure Service Principal

Implementing authentication using an Azure Service Principal involves obtaining the necessary credentials (client ID, secret, and tenant ID) from your service principal record in the portal or through PowerShell/CLI commands. Once you have these credentials, you can use them in your application code by passing them as parameters during runtime.

Here’s some sample C# code that demonstrates how this works:

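using Microsoft.IdentityModel.Clients.ActiveDirectory; // ADAL (deprecated in favor of MSAL)

var credential = new ClientCredential(clientId, clientSecret);
// The authority URL selects the Azure AD tenant to authenticate against.
var context = new AuthenticationContext("https://login.windows.net/" + tenantId);
// Request a token for the Azure Resource Manager API.
var result = await context.AcquireTokenAsync("https://management.azure.com/", credential);
string accessToken = result.AccessToken;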

This code obtains an access token using the acquired credentials and the Azure AD OAuth 2.0 authentication endpoint. The acquired access token can then be used to communicate with Azure resources that require authentication.
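For comparison, here is a sketch of the same OAuth 2.0 client credentials flow against the v2.0 token endpoint, written in Python with only the standard library. The tenant ID, client ID, and secret are placeholders, the helper names are made up for this example, and the code builds the request and parses a response body without performing the network call.

```python
import json
import urllib.parse
import urllib.request


def build_token_request(tenant_id, client_id, client_secret,
                        scope="https://management.azure.com/.default"):
    """Build the POST request that exchanges client credentials for a token."""
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    body = urllib.parse.urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        "scope": scope,
    }).encode("ascii")
    headers = {"Content-Type": "application/x-www-form-urlencoded"}
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def parse_access_token(response_body: str) -> str:
    """Extract the bearer token from the JSON token response."""
    return json.loads(response_body)["access_token"]


request = build_token_request("my-tenant-id", "my-client-id", "my-client-secret")
# Sending it would be: urllib.request.urlopen(request).read().decode()
```

The returned token is then sent to Azure APIs in an "Authorization: Bearer" header until it expires, at which point the same request is repeated.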

Best Practices for Using an Azure Service Principal with APIs and Applications

Here are some best practices to follow when using an Azure Service Principal for application and API authentication:

– Avoid hardcoding service principal credentials in your code. Instead, use environment variables or a secure configuration store to manage your secrets.

– Limit the scope of each service principal by assigning only the necessary role assignments based on the required permissions.

– Use RBAC auditing to monitor role assignments on your resources and identify any unauthorized changes.

– Rotate service principal secrets regularly to improve security posture and reduce risk of compromise.

By following these best practices, you can ensure that your applications and APIs are securely communicating with Azure resources without exposing any unnecessary risks or vulnerabilities.
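The first practice, keeping credentials out of the code, can be sketched with environment variables. The variable names below follow the convention used by the Azure SDK's environment-based credentials; the function itself is a hypothetical helper, and a managed secret store would serve the same purpose.

```python
import os

REQUIRED_VARIABLES = ("AZURE_TENANT_ID", "AZURE_CLIENT_ID", "AZURE_CLIENT_SECRET")


def load_sp_credentials(env=None):
    """Read service principal credentials from the environment.

    Raises RuntimeError naming any variable that is missing or empty,
    so misconfiguration fails fast instead of at the first API call.
    """
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED_VARIABLES if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {name: env[name] for name in REQUIRED_VARIABLES}


# Passing a dict makes the helper easy to exercise without touching
# the real environment.
example = load_sp_credentials({
    "AZURE_TENANT_ID": "my-tenant-id",
    "AZURE_CLIENT_ID": "my-client-id",
    "AZURE_CLIENT_SECRET": "my-client-secret",
})
```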

Best Practices for Managing Azure Service Principals

Tips on how to secure and manage your service principals effectively

When it comes to managing Azure Service Principals, there are several best practices that you can follow to ensure that they are secure and managed effectively. First and foremost, it’s important to limit the number of service principals that you create. Each service principal represents a potential entry point into your system, so creating too many can be risky.

Make sure you only create the ones you really need and delete any unused ones. Secondly, it’s important to keep track of who has access to your service principals.

This means keeping a log of all the users who have access to each one, as well as regularly reviewing the list of users with access to make sure it is up-to-date. You should also revoke access for anyone who no longer needs it.

Be sure to use strong passwords or keys for your service principals and change them regularly. This will help prevent unauthorized access and keep your system secure.

Discussion on common mistakes to avoid

There are several common mistakes that people make when managing Azure Service Principals. One is creating too many service principals, as mentioned earlier.

Another mistake is granting too many permissions to a single service principal. When this happens, if someone gains unauthorized access they will have broad control over the resources associated with that principal.

Another common mistake is not monitoring activity associated with a particular service principal closely enough. This can lead to security issues going unnoticed until it’s too late.

Failing to revoke unnecessary permissions or to delete unused service principals can also create security vulnerabilities in your system. It’s important for those who manage Azure Service Principals to be aware of these common mistakes so they can avoid them and keep their systems secure.

Conclusion

Azure Service Principals are a powerful tool for managing and securing your Azure resources. They allow you to grant specific permissions to applications and APIs without having to rely on user accounts, which can be a security risk. Creating an Azure Service Principal is straightforward, but it’s important to follow best practices for managing them.

Assigning roles to service principals is critical for ensuring that they have access only to the resources they need. When using service principals with APIs and applications, it’s essential that you choose the appropriate authentication method.

While client secrets are the most common method, they can pose a security risk if not managed properly. To ensure the security of your Azure resources, it’s important to follow best practices when managing your service principals.

For example, make sure that you keep client secrets secure and rotate them regularly. Additionally, monitor your applications and APIs closely for any unusual activity.

Overall, Azure Service Principals are an essential component of any organization’s cloud security strategy. By following best practices when creating and managing them, you can ensure that your Azure resources remain secure while still allowing your applications and APIs to access them as needed.

by Mark | May 16, 2023 | Azure, Azure Blobs, Cloud Storage, Cloud Storage Manager, Storage Accounts

A Comprehensive Guide to Faster, Scalable, and Reliable Storage

Introduction

Are you tired of sluggish load times when uploading or downloading large files? Do you need a reliable and scalable storage solution for your business or personal use?

Look no further than Premium Block Blob Accounts! In this article, we’ll explore what these accounts are and why they’re essential for anyone dealing with large amounts of data.

Definition of Premium Block Blob Accounts

Before diving into the benefits of a Premium Block Blob Account, let’s define what it is. Essentially, it’s a type of storage account offered by Microsoft Azure that allows users to store and manage large unstructured data such as videos, images, audio files, backups, and static websites through block blobs.

Block blobs are used to store massive chunks of data in individual blocks that can be managed independently. When a file is uploaded to a block blob container, the file is split into blocks and uploaded in parallel to maximize upload speeds.
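The splitting step can be illustrated with a few lines of Python. Each block needs an ID that is Base64-encoded and has the same length as every other ID in the blob; the blocks are then uploaded individually (the Put Block operation) and committed in order (Put Block List). The function name and the tiny block size are assumptions chosen to keep the example readable, and no upload is performed.

```python
import base64


def split_into_blocks(data: bytes, block_size: int):
    """Split `data` into (block_id, chunk) pairs ready for staged upload."""
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    blocks = []
    for index, offset in enumerate(range(0, len(data), block_size)):
        # Zero-padded counters keep every encoded ID the same length.
        block_id = base64.b64encode(f"{index:06d}".encode("ascii")).decode("ascii")
        blocks.append((block_id, data[offset:offset + block_size]))
    return blocks


for block_id, chunk in split_into_blocks(b"abcdefghij", 4):
    print(block_id, chunk)
```

Because blocks are independent until they are committed, they can be uploaded in parallel and a failed block can be retried on its own.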

Importance of Using Premium Block Blob Accounts

Now that we’ve defined what Premium Block Blob Accounts are, let’s dive into why they’re important. First and foremost, they offer faster upload and download speeds than standard storage accounts because they use Solid-State Drives (SSDs) instead of Hard Disk Drives (HDDs). This results in improved performance when accessing frequently accessed files or hosting high traffic websites.

Additionally, premium block blob accounts are designed for higher transaction rates and more consistent response times than standard accounts, which helps as your workload grows. They also offer strong reliability and availability, making them well suited to storing critical data such as backups or media files.

Built-in redundancy keeps multiple copies of your data: premium block blob accounts support locally redundant storage (LRS) and, in supported regions, zone-redundant storage (ZRS), so a single hardware failure does not take your data offline. Geo-redundant options are not available for this account type, so plan cross-region copies separately if you need them. Premium Block Blob Accounts are a type of storage account that every business or individual dealing with large amounts of data should consider.

With faster speeds, higher scalability and performance, improved reliability and availability – what more could you ask for? Stay tuned to learn more about the benefits, use cases, and how to set up a Premium Block Blob Account.

Benefits of Using Premium Block Blob Accounts

Faster Upload and Download Speeds

When it comes to storing large files such as high-definition videos or large datasets, every second counts. Traditional storage options can be painfully slow when uploading or downloading large amounts of data.

However, with Premium Block Blob Accounts, you can expect lightning-fast speeds that will save you time and frustration. For example, with a Premium Block Blob Account, you can upload and download terabytes of data in a matter of hours rather than days.
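Whether a transfer takes hours or days depends heavily on the sustained throughput you can achieve end to end. A rough back-of-the-envelope calculation, assuming decimal units (1 TB = 10^12 bytes) and a constant throughput, looks like this; the sizes and throughput figures are illustrative, not measured results:

```python
def transfer_hours(size_tb: float, throughput_gbps: float) -> float:
    """Estimate transfer time in hours for size_tb terabytes at throughput_gbps gigabits/s."""
    bits = size_tb * 1e12 * 8                   # terabytes -> bits
    seconds = bits / (throughput_gbps * 1e9)    # gigabits/s -> bits/s
    return seconds / 3600


print(round(transfer_hours(1, 1), 2))      # 1 TB at 1 Gbps
print(round(transfer_hours(10, 0.1), 1))   # 10 TB at 100 Mbps
```

At 1 Gbps a terabyte moves in a little over two hours, while 10 TB over a 100 Mbps link takes more than nine days, so the network path matters as much as the storage tier.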

Higher Scalability and Performance

One of the most significant benefits of using a Premium Block Blob Account is the ability to scale storage quickly without sacrificing performance. With traditional storage methods, scaling up often leads to reduced performance, which can cause delays and other issues. However, with Premium Block Blob Accounts, you can add or remove capacity as needed while maintaining fast access times.

In addition, as your storage needs grow over time, the system automatically adjusts to meet your demands without any manual intervention required on your end. This means you won’t have to worry about downtime or other disruptions when scaling up your storage capacity.

Improved Reliability and Availability

Another significant benefit of using Premium Block Blob Accounts is increased reliability and availability. With traditional storage methods such as hard drives or external drives, there is always a risk that something could go wrong – whether due to hardware failure or human error.

However, with a Premium Block Blob Account hosted on Microsoft Azure’s global network infrastructure and backed by SLAs (service level agreements), you can expect your data to be available when you need it. The Azure Storage SLA commits to at least 99.9% availability for read and write requests, and the redundancy option you choose keeps multiple copies of your data so that a single hardware failure does not make it unavailable.
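To put an availability percentage in perspective, it helps to convert it into the downtime it still permits. The sketch below does that arithmetic for a 30-day month; the 30-day period is an assumption made for illustration.

```python
def allowed_downtime_minutes(availability_percent: float, days: int = 30) -> float:
    """Minutes of downtime permitted over `days` at the given availability percentage."""
    total_minutes = days * 24 * 60
    return total_minutes * (1 - availability_percent / 100)


for target in (99.9, 99.99):
    print(target, round(allowed_downtime_minutes(target), 2))
```

At 99.9% the budget is about 43 minutes per 30-day month; at 99.99% it shrinks to a little over 4 minutes.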

Cost-Effectiveness And Flexible Pricing

Using a Premium Block Blob Account can also be cost-effective. You only pay for the storage you use, so there’s no need to worry about overprovisioning or wasting money on unused storage. Premium accounts charge more per gigabyte stored but less per transaction than standard accounts, so they suit workloads with many small, frequent operations. Additionally, if you can tolerate slightly longer access times, a standard account using the Hot or Cool access tier may cost less for the same amount of data.

Premium Block Blob Accounts offer faster upload and download speeds, higher scalability and performance, improved reliability and availability compared to traditional storage options. With flexible pricing options that only require payment for what you use, it is easy to see why businesses big and small are adopting this cloud-based technology.

Use Cases for Premium Block Blob Accounts

Storing Large Media Files such as Videos, Images, and Audio Files

When it comes to storing media files such as videos, images, and audio files, a Premium Block Blob Account is an excellent choice. Traditional storage solutions often have restrictions on file size or limit the amount of data that can be transferred at once.

With Premium Block Blob Accounts, such limits are rarely a concern: a single block blob can be many terabytes in size. You can upload and download large media files quickly and with little effort.

Another advantage of storing large media files in a Premium Block Blob Account is the ability to access them from anywhere in the world. This means you can easily share your media with others without having to physically transfer bulky files.

Backing up Critical Data for Disaster Recovery Purposes

Disasters can strike at any time, which is why it’s essential to have backups of your critical data. A Premium Block Blob Account is a reliable option for backing up important information because it provides excellent durability and availability. The process of backing up data in a Premium Block Blob Account is straightforward and secure.

You can automate backups using Azure’s built-in tools or use third-party backup solutions that integrate seamlessly with Azure. In the event of a disaster or system failure, you can restore your data quickly from your Premium Block Blob Account without worrying about losing valuable information.

Hosting Static Websites with High Traffic Volume

If you’re looking for an affordable way to host a static website with high traffic volume, then a Premium Block Blob Account should be at the top of your list. Unlike traditional web hosting solutions that require expensive servers and ongoing maintenance costs, hosting your site on Azure’s cloud-based infrastructure provides scalable performance without breaking the bank. With features like automatic load balancing and content delivery networks (CDNs), static websites hosted on Premium Block Blob Accounts load quickly from anywhere in the world.

You can also take advantage of Azure’s built-in security features such as SSL certificates and role-based access control to keep your website secure. Overall, hosting a static website on a Premium Block Blob Account is an excellent choice for small businesses or individuals who want to create a strong online presence without spending a fortune.

Conclusion

Premium Block Blob Accounts are an excellent option for anyone looking for reliable and scalable storage solutions. Whether you’re storing large media files, backing up critical data, or hosting a static website with high traffic volume, Premium Block Blob Accounts offer numerous benefits that traditional storage solutions cannot match.

Remember to monitor your usage and performance metrics regularly and implement security measures such as encryption at rest and in transit to keep your data safe. With best practices in place, you can enjoy all the advantages of this powerful storage solution while keeping your data secure.

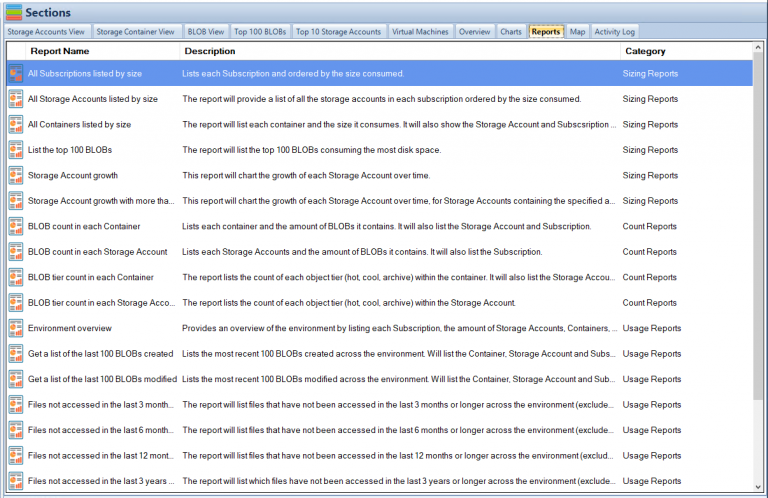

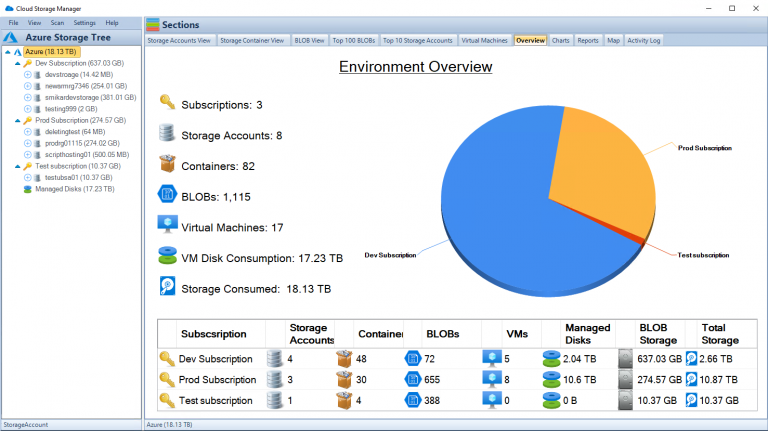

Monitor your Azure Storage Consumption

Use Cloud Storage Manager to monitor and see how much Azure Storage you are consuming, whether it’s across one subscription or multiple.

With Cloud Storage Manager you can:

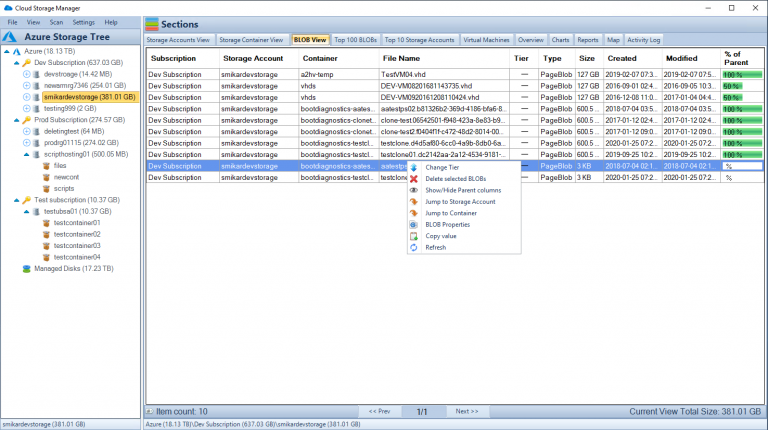

- 🔍 Cloud Storage Manager is a tool for Azure users to gain insights into their storage consumption.

- 🗺️ It provides a world map of Azure locations and an overview of storage consumption.

- 📁 The tool allows users to view and manage storage accounts, containers, and blobs.

- 📋 The File Menu enables search, viewing log files, compressing the database, and exiting the manager.

- 👀 The View Menu allows expanding and collapsing details in the Azure Storage Tree, refreshing the view, and rebuilding the tree.

- 🔄 The Scan Menu enables scanning the entire Azure environment or selected subscriptions, storage accounts, containers, or virtual machines.

- ⚙️ The Settings Menu allows configuring Azure credentials and scheduling automatic scans.

- ❓ The Help Menu provides options for registration, information about the version, and purchasing different editions.

- 🌳 The Azure Storage Tree allows browsing Azure subscriptions, storage accounts, and containers, with right-click options for scanning, refreshing, and creating new storage containers.

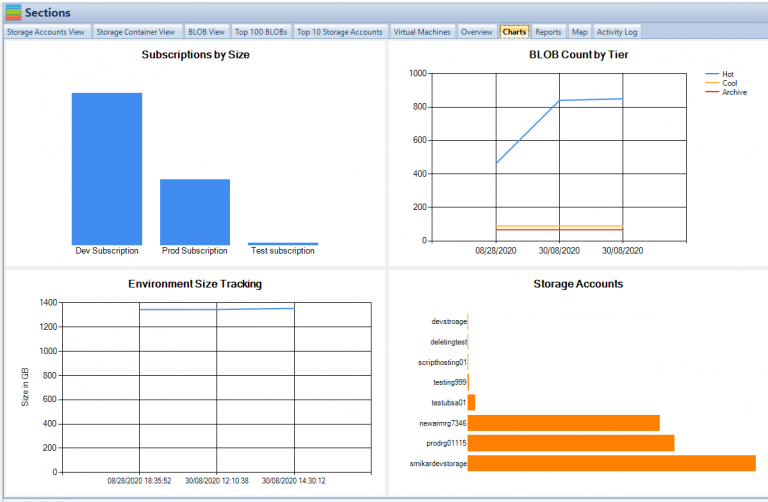

- 📊 Different tabs provide detailed information about storage accounts, containers, blobs, and virtual machines, including their attributes, sizes, and creation/modification dates.

- 📈 The Overview tab shows a summary of Azure storage usage, broken down by subscription.

- 📊 Charts and reports offer graphical representations and detailed information about storage consumption, top blobs, storage account growth, blob counts, file access, virtual machines, and more.

- 🗺️ The Azure Location Storage Map visualizes the worldwide distribution of storage accounts and their consumption.

- 💡 Cloud Storage Manager comes in three editions: Lite, Advanced, and Enterprise, with varying limits on Azure storage size and pricing tiers.

How to Set Up a Premium Block Blob Account

Setting up a Premium Block Blob account is relatively straightforward, but it does require some knowledge of Microsoft Azure and storage concepts. Here are the steps you need to follow to set up your account:

Creating a Microsoft Azure Account

Before you can create a Premium Block Blob account, you will need to create a Microsoft Azure account if you don’t have one already. You can sign up for an account on the Azure website. Once you have an account, you will need to log in to the Azure portal.

Choosing the Appropriate Storage Tier

After logging in, navigate to the storage accounts section of the portal and select “Create.” On the Basics tab, set Performance to “Premium” and then choose “Block blobs” as the premium account type (the other premium account types are file shares and page blobs). This combination suits large media files and other data-intensive applications that require high performance and low-latency access.

Configuring Access Keys and Permissions

Once you have chosen the appropriate storage tier for your needs, work through the remaining configuration options, such as choosing between locally redundant storage (LRS) and zone-redundant storage (ZRS) and setting up virtual networks or firewall rules. Then click on “Review + Create” at the bottom of the page.
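If you prefer to script the account rather than click through the portal, the same choices can be captured as a resource definition. The sketch below builds the core fields as a Python dictionary: the `BlockBlobStorage` kind and the `Premium_LRS`/`Premium_ZRS` SKU names identify a premium block blob account, while the account name and region are placeholders, and the `apiVersion` a full template would need is deliberately left out. Treat it as a starting point under those assumptions, not a complete deployment template.

```python
import json


def premium_block_blob_account(name: str, location: str, zone_redundant: bool = False) -> dict:
    """Build the core resource fields for a premium block blob storage account."""
    return {
        "type": "Microsoft.Storage/storageAccounts",
        "name": name,
        "location": location,
        "kind": "BlockBlobStorage",  # premium account type for block blobs
        "sku": {"name": "Premium_ZRS" if zone_redundant else "Premium_LRS"},
        "properties": {
            "supportsHttpsTrafficOnly": True,   # reject plain-HTTP requests
            "minimumTlsVersion": "TLS1_2",
            "allowBlobPublicAccess": False,     # no anonymous access
        },
    }


# "examplepremiumblobs" and "eastus" are placeholder values.
resource = premium_block_blob_account("examplepremiumblobs", "eastus")
print(json.dumps(resource, indent=2))
```

Keeping the definition in code makes it easier to review security settings such as HTTPS-only traffic and anonymous access alongside the tier and redundancy choices.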

Complete setup by clicking “Create.” Once the account has been deployed, its access keys are available in the account’s “Access keys” section, and applications can use them to connect. These keys should be kept secure since they allow full control over data stored in your Premium Block Blob accounts.

It’s important to also configure permissions so that only authorized individuals or applications can access your data. You can do this by setting up role-based access control (RBAC) policies or configuring Azure Active Directory (AAD) integration.

Setting up a Premium Block Blob account can be an excellent investment for your business or personal storage needs. By following these guidelines, you will be able to create an account that meets your specific requirements and provides fast, reliable access to your data.

Best practices for managing Premium Block Blob Accounts

As with any type of storage solution, managing your Premium Block Blob Account effectively is crucial to its success. Here are some best practices to consider:

Monitoring usage and performance metrics

One of the key advantages of Premium Block Blob Accounts is their scalability and performance. However, in order to ensure that your account is meeting your needs, it’s important to regularly monitor its usage and performance metrics.

This can be done through Azure’s built-in monitoring tools, which provide real-time data on things like storage capacity, IOPS (input/output operations per second), and bandwidth usage. By keeping a close eye on these metrics, you can quickly identify any issues or potential bottlenecks and take proactive steps to address them.
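Once metric values are exported, spotting a potential bottleneck can be as simple as comparing each sample against a limit you have chosen. The sketch below assumes the samples have already been retrieved; the metric names are modelled on Azure Monitor storage metrics, but the values and thresholds are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MetricSample:
    name: str
    value: float


# Hypothetical alert limits; choose values that match your own workload.
THRESHOLDS = {
    "UsedCapacity": 5e12,        # bytes
    "SuccessE2ELatency": 50.0,   # milliseconds
}


def find_breaches(samples, thresholds):
    """Return the samples whose value exceeds the threshold set for their metric."""
    return [s for s in samples if s.name in thresholds and s.value > thresholds[s.name]]


samples = [
    MetricSample("UsedCapacity", 3.2e12),
    MetricSample("SuccessE2ELatency", 72.5),
    MetricSample("Transactions", 1_200_000),  # no threshold set, so never flagged
]
for breach in find_breaches(samples, THRESHOLDS):
    print(f"{breach.name} is above its limit: {breach.value}")
```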

Implementing security measures such as encryption at rest and in transit

Data security is always a top concern when it comes to storing sensitive information in the cloud. Thankfully, there are several ways to enhance the security of your Premium Block Blob Account.

One key measure is encryption for both data at rest (i.e., stored within the account) and data in transit (i.e., being transferred between the account and other services). Azure Storage encrypts data at rest automatically using 256-bit AES, and you can require HTTPS (TLS) for all requests so that data is also protected in transit, making it easy to secure your files no matter how they’re being used.
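On the client side, a simple guard can catch configuration that would send data over an unencrypted connection. This sketch only inspects the URL scheme; the account and container names in the example URLs are placeholders.

```python
from urllib.parse import urlparse


def uses_encrypted_transport(url: str) -> bool:
    """Return True only if the URL uses the https scheme."""
    return urlparse(url).scheme == "https"


# Placeholder endpoints for illustration.
print(uses_encrypted_transport("https://exampleaccount.blob.core.windows.net/media"))  # True
print(uses_encrypted_transport("http://exampleaccount.blob.core.windows.net/media"))   # False
```

A check like this complements, rather than replaces, enforcing secure transfer on the storage account itself.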

Optimizing storage costs by leveraging features such as tiered storage

Another important aspect of managing a successful Premium Block Blob Account is optimizing storage costs. While these accounts offer excellent performance and scalability benefits, they can also be more expensive than other types of cloud storage solutions. To mitigate this cost factor, it’s important to leverage features like tiered storage.

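In practice, premium block blob accounts do not offer the Hot, Cool, or Archive access tiers themselves, so a tiered approach means keeping only latency-sensitive data in the premium account and copying less frequently accessed data to a standard account, where lower-cost tiers are available. Additionally, regularly reviewing your usage patterns and adjusting your storage allocation as needed can help ensure that you’re only paying for what you actually need.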
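A rough way to reason about where data belongs is to estimate monthly cost from the amount stored and the number of transactions. The prices below are placeholders chosen only to show the shape of the trade-off (a higher storage price with a lower transaction price, and the reverse); substitute current figures for your region before drawing conclusions.

```python
def monthly_cost(stored_gb, transactions, price_per_gb, price_per_10k_tx):
    """Estimate monthly cost as storage charge plus transaction charge."""
    return stored_gb * price_per_gb + (transactions / 10_000) * price_per_10k_tx


# Placeholder prices (per GB per month, per 10,000 transactions). Not real rates.
PRICIER_STORAGE = {"price_per_gb": 0.15, "price_per_10k_tx": 0.02}
CHEAPER_STORAGE = {"price_per_gb": 0.02, "price_per_10k_tx": 0.05}

workloads = {
    "archive-like": (1_000, 1_000_000),     # lots of data, few transactions
    "chatty": (100, 500_000_000),           # little data, many transactions
}

for label, (gb, tx) in workloads.items():
    a = monthly_cost(gb, tx, **PRICIER_STORAGE)
    b = monthly_cost(gb, tx, **CHEAPER_STORAGE)
    print(f"{label}: pricier-storage option {a:.2f}, cheaper-storage option {b:.2f}")
```

With these placeholder numbers, the option with cheaper storage wins for the archive-like workload, while the option with cheaper transactions wins for the transaction-heavy one.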

Common Misconceptions About Premium Block Blob Accounts

It’s Only Necessary for Large Enterprises with Massive Amounts of Data

One of the biggest misconceptions about Premium Block Blob Accounts is that they are only necessary for large enterprises that deal with massive amounts of data. While it is true that these types of accounts are beneficial for large companies, they can also be useful for small to medium-sized businesses and even individuals who have a need for fast, reliable, and scalable storage. For example, if you have a small business that deals with video production or graphic design, you may find yourself needing to store and access large media files frequently.