by Mark | Feb 7, 2023 | Azure, Azure Blobs, Azure Files, Blob Storage, Security, Storage Accounts

Learn how to keep your data secure with Azure Storage security

In today’s digital world, data security is a top priority for businesses and individuals alike. With the increasing popularity of cloud computing, many organizations are relying on cloud storage services to store their sensitive information. Microsoft Azure Storage is one of the most popular cloud storage services, offering a range of storage solutions to meet the needs of different users. However, with the growing number of cyber threats, it’s essential to ensure that your data is secure in the cloud. In this article, we’ll explore Azure Storage security and the best practices you can follow to keep your data safe.

What is Azure Storage Security?

Azure Storage security is a set of features and tools provided by Microsoft Azure to ensure the security of your data stored in the cloud. Azure Storage security helps you protect your data from unauthorized access, theft, and other security threats. The security features provided by Azure Storage include encryption, access controls, monitoring, and more.

Best Practices for Azure Storage Security

To ensure the security of your data stored in Azure Storage, it’s essential to follow best practices. Here are some of the most important ones:

- Encryption: Azure Storage supports encryption at rest, which means your data is encrypted when it is stored on disk. This helps to prevent your data from being read even if someone gains access to the underlying physical storage media.

- Access controls: You can use Azure Active Directory (AD) or Shared Access Signatures (SAS) to control access to your storage accounts. Azure AD allows you to manage access to your storage accounts through role-based access controls, while SAS allows you to grant limited access to specific resources in your storage accounts.

- Monitoring: Azure Storage provides a range of monitoring tools that you can use to monitor your storage accounts. You can use Azure Monitor to monitor the performance of your storage accounts, and Azure Activity Logs to track events and changes in your storage accounts.

- Backups: It’s essential to regularly back up your data stored in Azure Storage to ensure that you can recover your data in the event of a disaster. Azure Backup provides a range of backup solutions that you can use to back up your data stored in Azure Storage.

Encryption in Azure Storage

Encryption is an essential aspect of Azure Storage security. Azure Storage supports encryption at rest, which means that your data is encrypted when it is stored on disk. You can use Azure Storage Service Encryption (SSE) to encrypt your data automatically, or you can use Azure Disk Encryption to encrypt your virtual machines’ disks.

Access Controls in Azure Storage

Access controls are an important part of Azure Storage security. Azure Storage provides two main access control mechanisms: Azure Active Directory and Shared Access Signatures.

Azure Active Directory allows you to manage access to your storage accounts through role-based access control (RBAC). This means that you can assign different roles to different users, such as Reader, Contributor, and Owner, or data-access roles such as Storage Blob Data Reader.

Shared Access Signatures allow you to grant limited access to specific resources in your storage accounts. You can use SAS to grant access to your storage accounts to specific users, applications, or services for a specified period of time.
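Because a SAS carries its own expiry and permissions in the URL, it can be useful to audit the tokens you hand out. The following is a minimal sketch using only the Python standard library. It reads the `se` (signed expiry), `sp` (signed permissions), and `spr` (signed protocol) query parameters of a SAS URL. The account name, container, and signature in `EXAMPLE_URL` are made up for illustration, and the sketch assumes the expiry is written in the full `YYYY-MM-DDThh:mm:ssZ` form.

```python
from datetime import datetime, timezone
from urllib.parse import urlsplit, parse_qs

# Hypothetical SAS URL; the account, path, and signature are placeholders.
EXAMPLE_URL = (
    "https://examplestorage.blob.core.windows.net/reports/q1.pdf"
    "?sv=2021-08-06&sp=r&se=2023-03-01T00%3A00%3A00Z&spr=https&sig=REDACTED"
)

def describe_sas(url, now=None):
    """Summarise the expiry and permissions encoded in a SAS URL."""
    now = now or datetime.now(timezone.utc)
    params = parse_qs(urlsplit(url).query)
    # 'se' holds the expiry time; this sketch assumes the seconds-precision UTC form.
    expiry = datetime.strptime(params["se"][0], "%Y-%m-%dT%H:%M:%SZ")
    expiry = expiry.replace(tzinfo=timezone.utc)
    return {
        "permissions": params.get("sp", [""])[0],   # e.g. "r" for read
        "expires": expiry,
        "expired": expiry <= now,
        "https_only": params.get("spr", [""])[0] == "https",
    }

if __name__ == "__main__":
    print(describe_sas(EXAMPLE_URL))
```

A check like this only inspects what the token claims; the storage service still validates the signature on every request.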

Monitoring in Azure Storage

Monitoring is an important aspect of Azure Storage security. Azure Storage provides a range of monitoring tools that you can use to monitor your storage accounts. You can use Azure Monitor to monitor the performance of your storage accounts, including metrics such as storage usage, request rates, and response times. Additionally, you can use Azure Activity Logs to track management events and changes in your storage accounts, such as changes to access control policies or account configuration. Data operations, such as the deletion of individual blobs, are recorded in Azure Storage resource logs, which you can collect through Azure Monitor. By monitoring your storage accounts, you can detect and respond to security threats quickly.

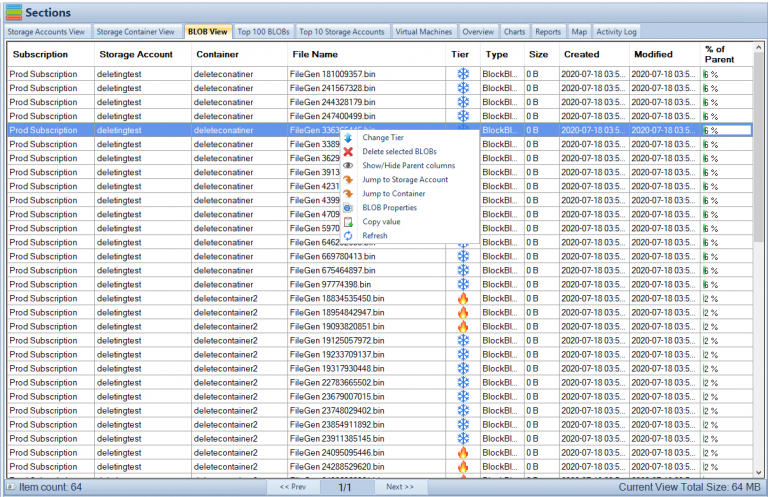

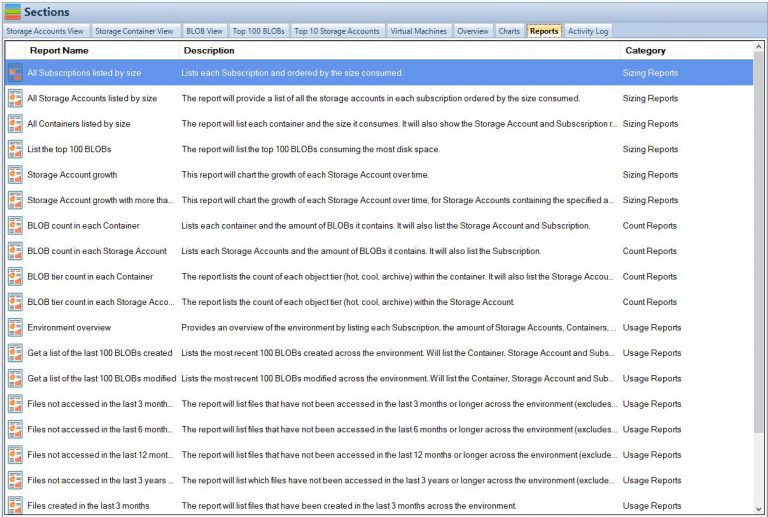

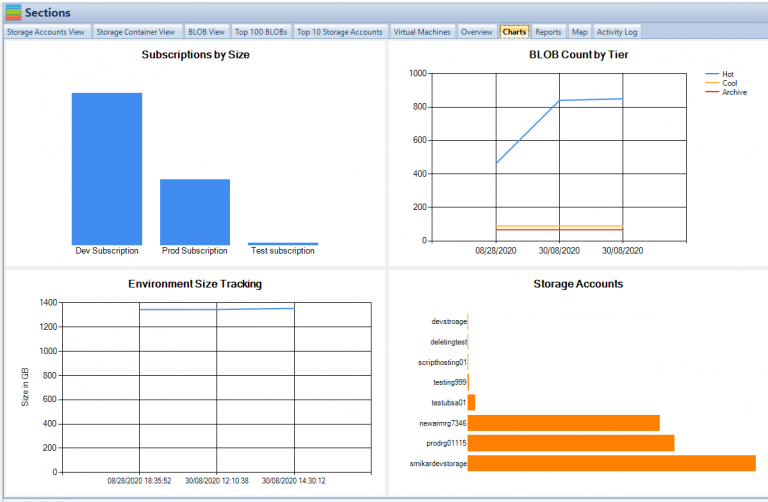

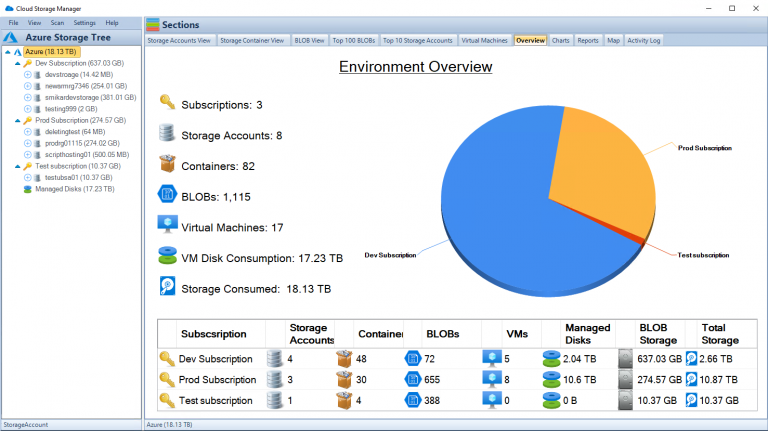

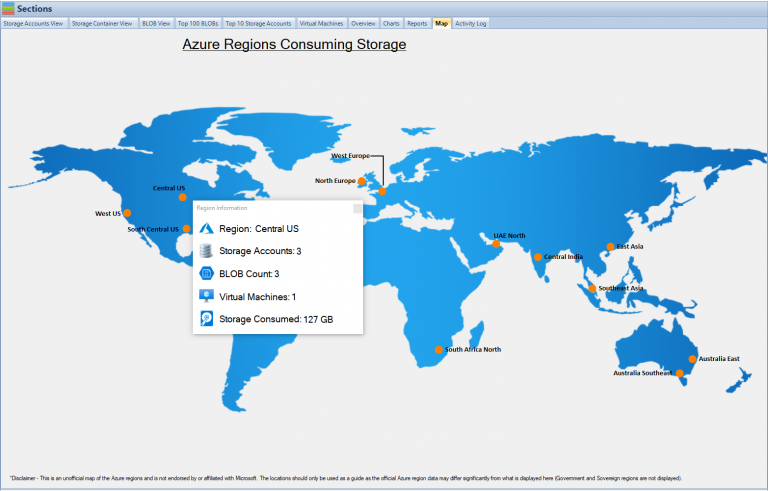

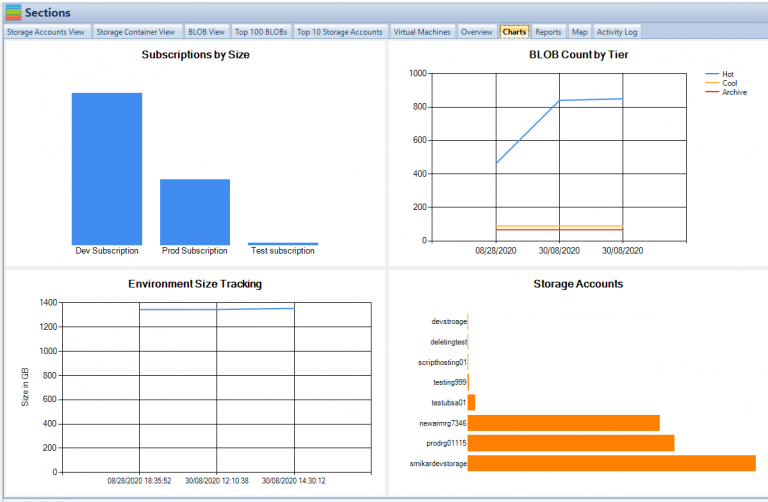

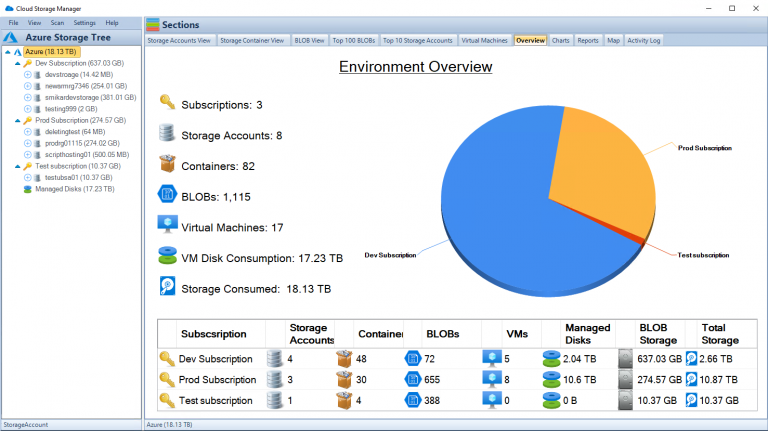

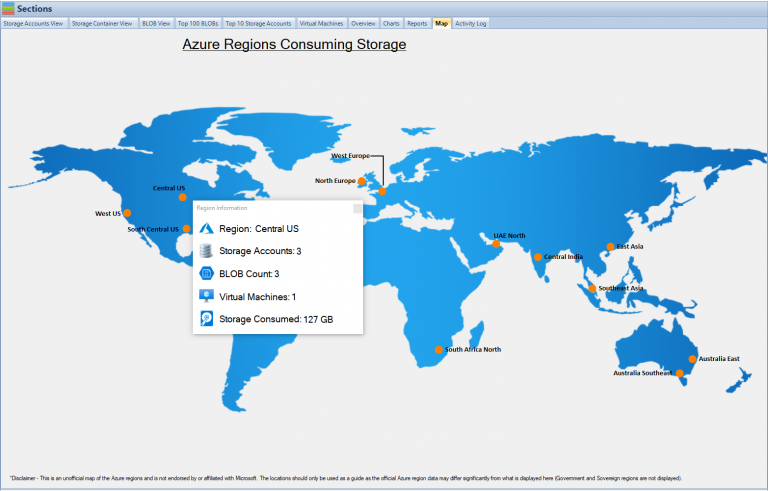

You should also monitor the growth of your storage accounts with a tool such as Cloud Storage Manager, which provides analytics on your Azure Storage.

Backups in Azure Storage

Regular backups are critical to ensure that you can recover your data in the event of a disaster. Azure Backup provides backup solutions for data stored in Azure Storage, including Blob storage and Azure Files. It does not cover Queue storage, so any queue data you need to keep must be protected by other means. Additionally, Azure Backup integrates with other Azure services, such as Azure Site Recovery, to provide a comprehensive disaster recovery solution.

Risks of not securing your Azure Storage

There are several potential risks and consequences of not securing data stored in Azure Storage. Some of the most significant ones are:

- Data Breaches: Unsecured data stored in Azure Storage is vulnerable to unauthorized access, theft, and other security threats. This can result in sensitive information being exposed, causing damage to a business’s reputation and potentially leading to legal consequences.

- Compliance Violations: Depending on the type of data being stored, businesses may be required to comply with various regulations such as HIPAA, PCI DSS, or GDPR. Failing to secure data stored in Azure Storage can result in non-compliance and penalties.

- Financial Losses: Data breaches can result in financial losses due to the cost of responding to the breach, restoring the data, and repairing damage to the business’s reputation.

- Loss of Confidence: Data breaches can erode trust in a business and result in a loss of confidence among customers, partners, and stakeholders.

- Competitive Disadvantage: Unsecured data stored in Azure Storage can hand a competitive advantage to other businesses that are able to access and use the data for their own gain.

- Intellectual Property Loss: Unsecured data stored in Azure Storage can result in the loss of intellectual property, such as trade secrets and confidential information, to unauthorized third parties.

Therefore, it is essential to secure data stored in Azure Storage by following best practices, such as encryption, access controls, monitoring, and regular backups.

Frequently Asked Questions about Azure Storage Security

- How does Azure Storage protect my data from unauthorized access?

Azure Storage protects your data from unauthorized access through a combination of network security, access control policies, and encryption. Network security measures such as virtual networks and firewalls help prevent unauthorized access to your data over the network. Access control policies, such as shared access signatures, allow you to control who has access to your data, and when. Encryption of both data at rest and data in transit helps ensure that even if your data is accessed by unauthorized parties, it cannot be read or used.

- Is Azure Storage secure for storing sensitive data?

Yes, Azure Storage can be used to store sensitive data, and Microsoft provides a range of security features and certifications to help ensure the security of your data. Azure Storage supports encryption of data at rest and in transit, as well as access control policies, network security, and audits. Additionally, Azure Storage is certified under a number of security and privacy standards, including ISO 27001, SOC 1 and SOC 2, and more.

- How can I be sure that my data is not accidentally deleted or modified in Azure Storage?

Azure Storage provides several features to help prevent accidental deletion or modification of your data, such as soft delete and versioning. Soft delete allows you to recover data that has been deleted for a specified period of time, while versioning helps you maintain a history of changes to your data and recover from unintended modifications. Additionally, Azure Backup provides a range of backup solutions that you can use to back up your data stored in Azure Storage.
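As a small illustration of the soft delete retention idea described above, the sketch below works out until when a deleted item could still be recovered. It is plain date arithmetic, not a call to any Azure API, and the function names and the 14-day retention value are assumptions chosen for the example.

```python
from datetime import datetime, timedelta, timezone

def recoverable_until(deleted_at, retention_days):
    """Return the moment the soft-delete retention period ends."""
    return deleted_at + timedelta(days=retention_days)

def can_restore(deleted_at, retention_days, now):
    """True while 'now' is still inside the retention window."""
    return now < recoverable_until(deleted_at, retention_days)

if __name__ == "__main__":
    deleted = datetime(2023, 2, 1, 9, 0, tzinfo=timezone.utc)
    # Hypothetical 14-day retention policy.
    print(recoverable_until(deleted, 14))
    print(can_restore(deleted, 14, datetime(2023, 2, 10, tzinfo=timezone.utc)))
```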

- What is Azure Storage and why is it important to secure it?

Azure Storage is a cloud storage service provided by Microsoft Azure. It offers various storage options such as Blob storage, Queue storage, Table storage, and File storage. Azure Storage is important because it is used to store and manage large amounts of data in the cloud. The data stored in Azure Storage can be sensitive, such as financial information, personal information, and confidential business information. Therefore, securing this data is crucial to prevent unauthorized access, data theft, and data breaches.

- What are some common security threats to Azure Storage?

Unauthorized access: Azure Storage data can be accessed by unauthorized individuals if the storage account is not properly secured. This can result in sensitive information being stolen or altered.

Data breaches: A data breach can occur if an attacker gains access to the Azure Storage account. The attacker can steal, alter, or delete the data stored in the account.

Man-in-the-middle attacks: An attacker can intercept data transmitted between the Azure Storage account and the user. The attacker can then steal or alter the data.

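Malware attacks: Malware running on a client or server that has access to the Azure Storage account can steal, alter, or encrypt the data stored in it, and malicious files can be uploaded to the account.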

- How can Azure Storage be secured?

Azure Storage account encryption: Data stored in Azure Storage can be encrypted to prevent unauthorized access. Azure offers several encryption options, including Azure Storage Service Encryption and Azure Disk Encryption.

Access control: Access to the Azure Storage account can be controlled using Azure Active Directory (AD) authentication and authorization. Azure AD can be used to manage who can access the data stored in the Azure Storage account.

Network security: Azure Storage can be secured by restricting access to the storage account through a virtual network. This can be achieved using Azure Virtual Network service endpoints.

Monitoring and auditing: Regular monitoring and auditing of the Azure Storage account can help detect security incidents and respond to them promptly. Azure provides various tools for monitoring and auditing, including Azure Log Analytics and Azure Activity Logs.
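To make the network security point more concrete, here is a minimal sketch of how an IP allow-list with a default-deny rule behaves. It only models the decision logic locally with Python's `ipaddress` module; it does not configure a storage account, and the function name and the address ranges (taken from the documentation-only ranges) are assumptions for illustration.

```python
import ipaddress

def is_allowed(client_ip, allowed_ranges, default_action="Deny"):
    """Return True if client_ip matches an allowed CIDR range.

    When no rule matches, the result falls back to default_action,
    mirroring a firewall that denies by default.
    """
    ip = ipaddress.ip_address(client_ip)
    for cidr in allowed_ranges:
        if ip in ipaddress.ip_network(cidr):
            return True
    return default_action == "Allow"

if __name__ == "__main__":
    office = ["203.0.113.0/24"]  # hypothetical office range
    print(is_allowed("203.0.113.10", office))
    print(is_allowed("198.51.100.7", office))
```

Keeping the default action at "Deny" means a forgotten rule blocks traffic rather than exposing data.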

- What are the consequences of not securing Azure Storage?

Loss of sensitive information: Unsecured Azure Storage accounts can result in sensitive information being stolen or altered, leading to a loss of trust and reputation.

Financial loss: Data breaches can result in financial losses, such as the cost of investigations, lawsuits, and compensation to affected individuals.

Compliance violations: If sensitive data is not properly secured, organizations may be in violation of various regulations, such as the General Data Protection Regulation (GDPR) and the Payment Card Industry Data Security Standard (PCI DSS).

Business interruption: A security incident in the Azure Storage account can result in downtime, which can impact business operations and lead to loss of revenue.

Final thoughts about Azure Storage Security

Azure Storage is a highly secure and reliable cloud storage solution that provides a range of security features to help protect your data from unauthorized access, accidental deletion or modification, and more. Whether you are storing sensitive data or simply need a secure and reliable storage solution for your data, Azure Storage is a great choice. With regular backups, network security measures, encryption, and access control policies, you can be sure that your data is safe and secure in Azure Storage.

by Mark | Feb 6, 2023 | Azure, Azure Files

Azure File Shares: A Beginner’s Guide

Azure File Shares is a cloud-based file sharing service that provides a secure, scalable, and highly available solution for storing and sharing files in the cloud. With Azure File Shares, you can store, share, and access files from anywhere, at any time, and from any device. Whether you’re a small business owner, a freelancer, or an enterprise-level organization, Azure File Shares can help you meet your file storage and sharing needs.

What are Azure File Shares?

Azure File Shares is a part of the Microsoft Azure platform and is designed to provide a scalable and highly available file storage solution in the cloud. With Azure File Shares, you can store files of any size and type, including documents, images, videos, and audio files. You can also share files with others, either by granting them access to your file share or by sending them a link to the file.

One of the key benefits of Azure File Shares is that it provides a high degree of security. All files stored in Azure File Shares are encrypted at rest, and you can control access to your files by setting permissions. You can also monitor access to your files and audit usage with Azure Activity Logs.

How Does Azure File Shares Work?

Azure File Shares uses the Server Message Block (SMB) protocol to allow users to access files in the cloud. SMB is a standard protocol used for sharing files and printers on a local network. When a user accesses a file share, Azure File Shares uses SMB to connect the user to the file share and allow them to access the files.

Benefits of Azure File Shares

There are many benefits to using Azure File Shares, including:

- Scalability: Azure File Shares can scale to meet the needs of any organization, whether it’s a small business or a large enterprise.

- Accessibility: Azure File Shares allows users to access files from anywhere, at any time, as long as they have an internet connection.

- Collaboration: Azure File Shares makes it easy for multiple users to share files and collaborate on projects.

- Security: Azure File Shares uses industry-standard security measures to protect files and keep them safe from unauthorized access.

Getting Started with Azure File Shares

To get started with Azure File Shares, you’ll need to create an Azure account and sign in to the Azure portal. Once you’re signed in, you can create a new file share by following these steps:

- Click on the “Create a resource” button in the Azure portal.

- Select “Storage” from the list of available resources.

- Choose “Storage account” as the type of resource you want to create.

- Fill in the required information, such as the name of your storage account, the subscription you want to use, and the resource group you want to use.

- Click the “Create” button to create your storage account.

Once your storage account is created, you can create a new file share by following these steps:

- Navigate to your storage account in the Azure portal.

- Click on the “File shares” option in the left-side menu.

- Click the “Add” button to create a new file share.

- Fill in the required information, such as the name of your file share and the quota for the file share.

- Click the “Create” button to create your file share.
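The names you enter in the steps above must follow Azure's naming rules: storage account names are 3 to 24 characters of lowercase letters and digits, and file share names are 3 to 63 characters of lowercase letters, digits, and hyphens, starting and ending with a letter or digit and without consecutive hyphens. The sketch below checks a name locally before you try it in the portal. The function names and sample names are made up for illustration.

```python
import re

# Storage account: 3-24 characters, lowercase letters and digits only.
ACCOUNT_RE = re.compile(r"[a-z0-9]{3,24}")
# Share: letters/digits separated by single hyphens (no leading, trailing, or double hyphen).
SHARE_RE = re.compile(r"[a-z0-9](?:-?[a-z0-9])*")

def valid_account_name(name):
    """Check a proposed storage account name against the naming rules."""
    return ACCOUNT_RE.fullmatch(name) is not None

def valid_share_name(name):
    """Check a proposed file share name against the naming rules."""
    return 3 <= len(name) <= 63 and SHARE_RE.fullmatch(name) is not None

if __name__ == "__main__":
    for candidate in ["examplestorage01", "Example_Storage"]:
        print(candidate, valid_account_name(candidate))
    for candidate in ["team-docs", "team--docs"]:
        print(candidate, valid_share_name(candidate))
```

A local check like this cannot tell you whether a storage account name is already taken, since account names must also be globally unique.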

Storing and Sharing Files in Azure File Shares

With Azure File Shares, you can store and share files in a number of different ways. Here are a few of the most common ways to store and share files in Azure File Shares:

Storing Files

To store files in Azure File Shares, you can simply drag and drop files into your file share in the Azure portal, or you can use the Azure File Share REST API to programmatically upload files. You can also use the Azure File Sync service to synchronize your on-premises file servers with your Azure File Shares, allowing you to store and access your files from anywhere.

Alternatively, you can map a drive from your server or workstation so you can access all the files directly.
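When mapping a drive over SMB, the share is addressed by a UNC path built from the storage account name and the share name, and the client needs outbound access on TCP port 445. The helper below is a small sketch that assembles that path. The function name and the example account and share names are assumptions for illustration.

```python
SMB_PORT = 445  # SMB traffic uses TCP port 445

def unc_path(account, share):
    """Build the UNC path for an Azure file share."""
    host = f"{account}.file.core.windows.net"
    # "\\\\" is two literal backslashes, "\\" is one.
    return "\\\\" + host + "\\" + share

if __name__ == "__main__":
    # Hypothetical account and share names.
    print(unc_path("examplestorage", "team-docs"))
```

If port 445 is blocked by your ISP or corporate firewall, the mapping will fail even when the path and credentials are correct.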

Analyzing Azure File Shares

To really optimize the cost of your cloud storage and make sure you’re not wasting money on unnecessary files, you need to have a good understanding of what files or blobs have or have not been used.

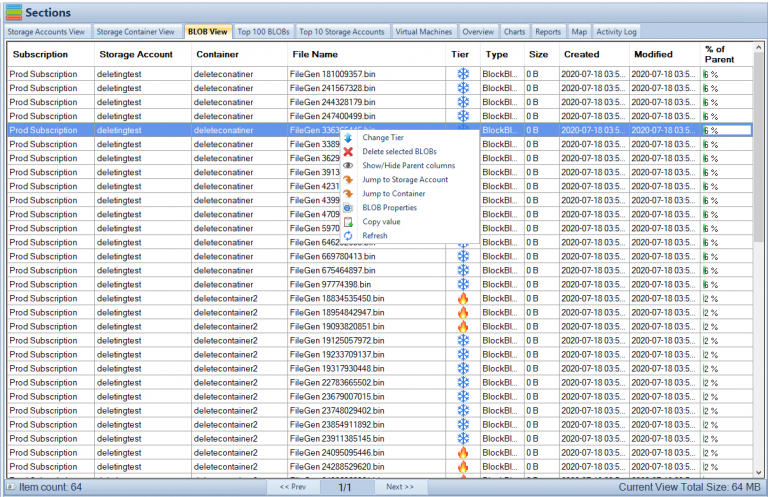

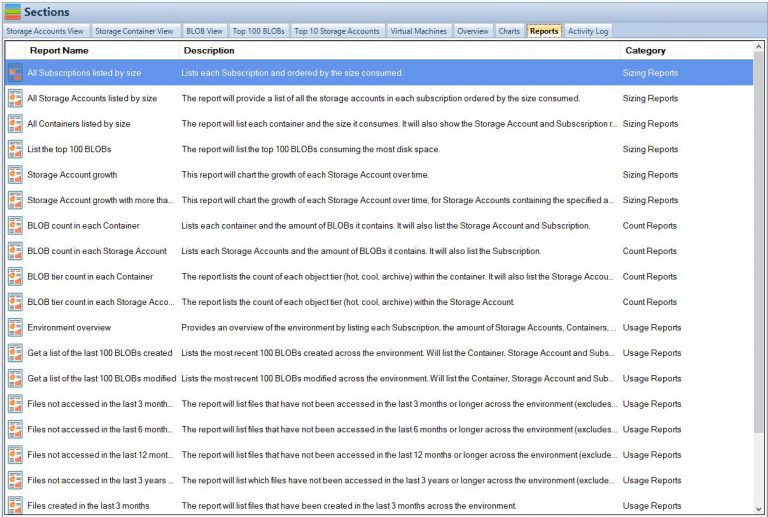

This is where Cloud Storage Manager comes in. Cloud Storage Manager is software that provides analytics of both Azure Blob and File storage, allowing you to see which files or blobs have or have not been used, so that you can reduce the cost of your cloud storage. With this software, you can easily identify and delete unnecessary files to save on storage costs.

To learn more about Cloud Storage Manager and start optimizing your Azure File Shares, visit https://www.smikar.com/cloud-storage-manager/

What are the limitations of Azure File Shares?

- Maximum file size: The maximum size for a single file in an Azure File Share is 4 TiB.

- Maximum file share size: By default, the maximum size for a standard Azure File Share is 5 TiB (5,120 GiB). Enabling large file shares raises this to 100 TiB.

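- Maximum IOPS per share: By default, a single standard Azure File Share can support up to 1,000 IOPS (input/output operations per second). Higher limits apply when large file shares are enabled or when you use the premium tier.

- Maximum throughput per share: By default, a single standard Azure File Share can support up to 60 MiB/s (mebibytes per second) of throughput.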

- Maximum number of files: The maximum number of files that can be stored in an Azure File Share is not limited by Azure. However, the performance of the file share may be impacted by a large number of small files.

- Limitations on filenames and path length: Azure File Shares have restrictions on the length of filenames and paths. Each filename or directory name must be between 1 and 255 characters in length, and the total length of the path to the file (directories and filename) must not exceed 2,048 characters.

- Limitations on naming conventions: Azure File Shares have restrictions on naming conventions for files and directories. Names cannot contain certain special characters and names must be unique within a directory.
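Length limits like the ones listed above are easy to check before a migration. The sketch below is a hypothetical helper that reports which limits a path would break. Both limits are passed in as arguments so you can substitute the figures that apply to your share; the deliberately tiny path limit in the example call is only there to make a violation visible.

```python
def check_path(path, max_component_len, max_path_len):
    """Return a list of limit violations for a '/'-separated path."""
    problems = []
    if len(path) > max_path_len:
        problems.append(f"path is {len(path)} characters, limit is {max_path_len}")
    for part in path.split("/"):
        # Every directory name and the filename must be within the component limit.
        if not 1 <= len(part) <= max_component_len:
            problems.append(f"component {part!r} has length {len(part)}")
    return problems

if __name__ == "__main__":
    # Toy path limit of 16 characters, chosen only for demonstration.
    print(check_path("projects/2023/report.docx", max_component_len=255, max_path_len=16))
```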

Conclusion

In conclusion, Azure File Shares is a highly beneficial and cost-effective solution for businesses and individuals who require cloud storage. With its scalability, reliability, and security features, it offers a comprehensive solution for storing, accessing and sharing files. Whether you’re a small business, a large corporation, or an individual, Azure File Shares can help you store and manage your data in the cloud.

Read our other blog posts on Azure Files, covering topics from saving money to what Azure Files is.

If you’re looking to optimize your Azure storage costs, it’s essential to have a tool that can help you monitor your usage and identify unused files or blobs. This is where Cloud Storage Manager comes in, providing analytics of both Azure Blob and File storage, allowing you to see what files or blobs have or have not been used, to reduce the cost of your cloud storage.

So, if you’re looking for an easy-to-use and cost-effective solution for storing and managing your data in the cloud, Azure File Shares is the perfect choice. And if you want to take it a step further and optimize your Azure storage costs, be sure to check out Cloud Storage Manager at https://www.smikar.com/cloud-storage-manager/.

by Mark | Feb 5, 2023 | Azure, Azure Tables

Azure Tables overview

Azure Tables is a NoSQL cloud-based data storage service provided by Microsoft. It allows users to store and retrieve structured data in the cloud, and it is designed to be highly scalable and cost-effective.

Azure Tables are used for a variety of purposes, including:

- Storing large amounts of structured data: Azure Tables are designed to store large amounts of structured data, making it a good option for big data workloads.

- Building highly scalable applications: Azure Tables are highly scalable, making it a good option for building applications that need to handle a large number of users or requests.

- Storing semi-structured data: Azure Tables can store semi-structured data, making it a good option for storing data that doesn’t fit well in a traditional relational database.

- Storing metadata: Azure Tables are commonly used to store metadata, such as the properties of a file or image.

- Storing log data: Azure Tables can be used to store log data, which can later be used for analysis and troubleshooting.

- Storing session data for web applications: Azure Tables can be used as a session state provider for web applications.

- Storing non-relational data: Azure Tables is good for storing non-relational data, such as data from IoT devices, mobile apps, and social media platforms.

- Storing hierarchical data: Azure Tables can be used to store hierarchical data, such as data from a tree-like structure.

In summary, Azure Tables are a cost-effective, highly scalable, and flexible data storage service that is well suited for storing large amounts of structured and semi-structured data. It can be used for different purposes and can be integrated easily with other Azure services.

What are the best practices when using Azure Tables?

When using Azure Tables, it’s important to follow best practices to ensure that your data is stored efficiently, securely, and cost-effectively. Some best practices to keep in mind include:

- Use partition keys and row keys effectively: When designing your Azure Tables, it’s important to choose appropriate partition keys and row keys to ensure that your data is stored efficiently. This will ensure that your data is spread across multiple storage nodes, which can help to improve performance and reduce costs.

- Understand indexing: Azure Tables indexes entities only on the PartitionKey and RowKey and does not support secondary indexes. Queries that filter on both keys are fast, while queries on other properties scan the partition or the whole table, so design your keys around the queries you run most often.

- Use batch operations: Azure Tables allows you to perform batch operations, which can help to reduce the number of requests made to the service and improve performance.

- Use the appropriate offering: Azure Table storage runs on standard general-purpose storage accounts. If your workload needs lower latency or global distribution, Azure Cosmos DB for Table is the premium alternative. Choosing the appropriate offering for your workload can help to reduce costs.

- Use Azure’s built-in security features: Azure Tables includes built-in security features such as Azure Active Directory (AAD) authentication, and access controls that can be used to secure your data.

- Use Azure’s built-in cost optimization tools: Azure provides a number of built-in tools that can help you optimize your storage costs, such as the Azure Cost Management tool.

- Monitor and analyze usage metrics: To ensure that your Azure Tables are being used efficiently and effectively, it’s important to monitor and analyze usage metrics such as storage usage, request rate, and error rate.

- Back up your data: It’s important to back up your data to avoid data loss and to have a disaster recovery plan.

By following these best practices, you can ensure that your Azure Tables are used efficiently, securely, and cost-effectively.
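The key design and batching advice above can be sketched in a few lines of standard-library Python. The example assumes a log-style workload: the PartitionKey combines a device ID with the day so that writes spread across partitions, and the RowKey is an inverted timestamp so that the newest entries sort first. That key scheme is one common convention, not the only valid design. The batching helper relies on two rules of Table storage batch operations: all entities in a batch must share a PartitionKey, and a batch holds at most 100 entities. The function names and sample data are hypothetical.

```python
from collections import defaultdict
from datetime import datetime, timezone

MAX_MS = 9_999_999_999_999  # 13-digit upper bound used to invert timestamps

def log_entity(device_id, when, message):
    """Build an entity whose keys spread load and sort newest-first."""
    ms = int(when.timestamp() * 1000)
    return {
        "PartitionKey": f"{device_id}_{when:%Y%m%d}",
        # Later timestamps give smaller RowKeys, so they sort first.
        "RowKey": f"{MAX_MS - ms:013d}",
        "Message": message,
    }

def plan_batches(entities, max_batch=100):
    """Group entities by PartitionKey and split each group into batches."""
    by_partition = defaultdict(list)
    for entity in entities:
        by_partition[entity["PartitionKey"]].append(entity)
    batches = []
    for group in by_partition.values():
        for start in range(0, len(group), max_batch):
            batches.append(group[start:start + max_batch])
    return batches

if __name__ == "__main__":
    now = datetime(2023, 2, 5, 10, 0, tzinfo=timezone.utc)
    entities = [log_entity("dev1", now, f"event {i}") for i in range(250)]
    print([len(batch) for batch in plan_batches(entities)])
```

Each batch produced this way could then be submitted as a single request, which is where the reduction in request count comes from.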

How to further save money with Azure Tables:

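- Use Azure reserved capacity where it applies: Reserved capacity allows you to pre-pay for a certain amount of storage for a period of time, which can result in significant savings. Reservations are offered for services such as Blob storage and Azure Files rather than for Table storage itself, so check which parts of your solution qualify.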

- Use Azure’s pay-as-you-go pricing model: Azure’s pay-as-you-go pricing model allows you to only pay for the storage that you actually use. This can be a cost-effective option for users who don’t need a large amount of storage.

- Take advantage of Azure’s free trial: Azure offers a free trial that allows users to test out the service before committing to a paid subscription.

- Use Azure cool storage for infrequently accessed data: Access tiers such as cool apply to Blob storage rather than to Tables, so exporting old, rarely read table data to cool Blob storage can reduce the cost of storing that data.

Azure Tables conclusion

Azure Tables is a NoSQL data storage service that allows users to store and retrieve structured data in the cloud. It is designed to be highly scalable and cost-effective. By understanding the different storage options available, using Azure Data Box, and taking advantage of Azure’s built-in cost optimization tools, users can efficiently reduce

by Mark | Feb 4, 2023 | Azure, Azure Files, Storage Accounts

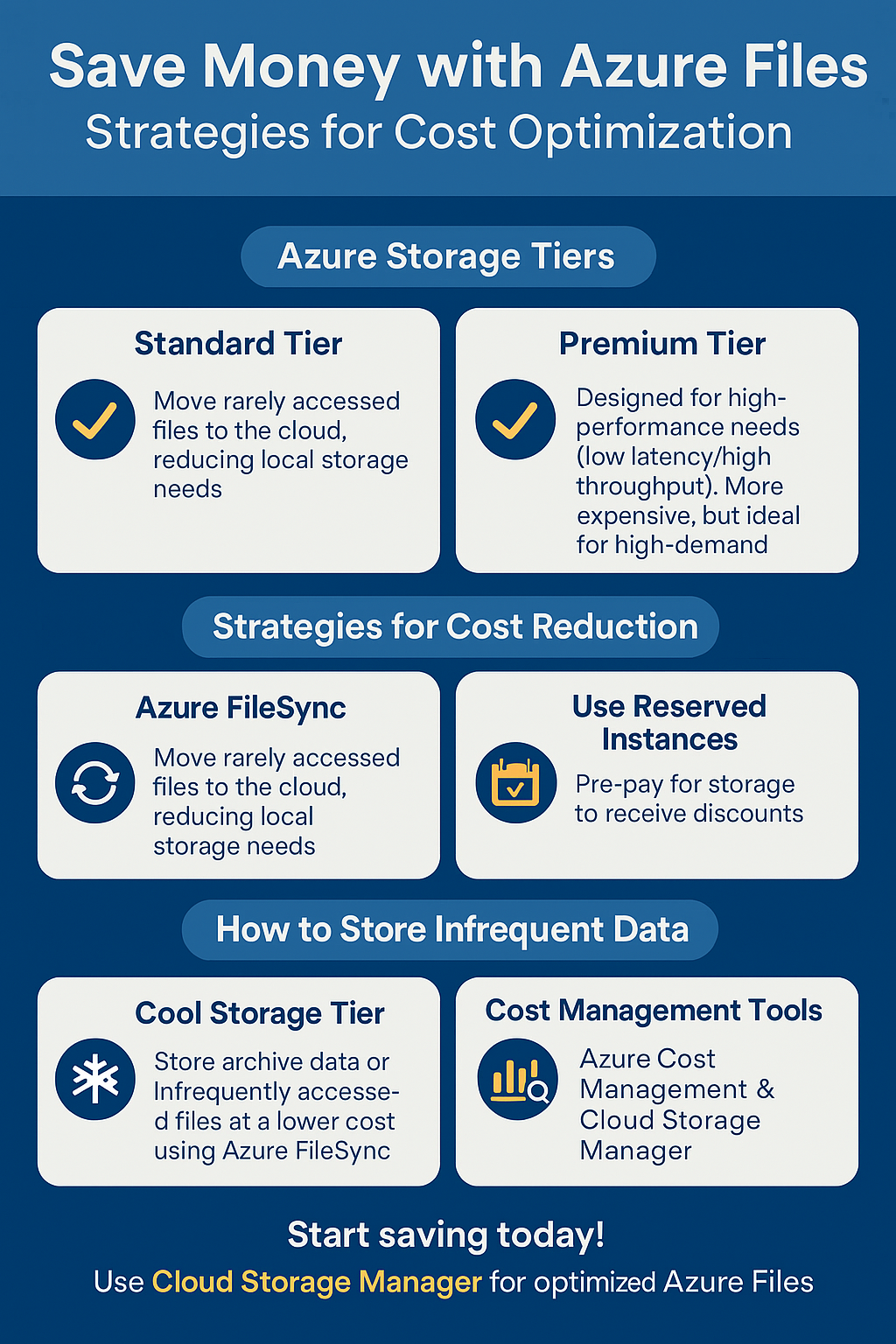

How to Save Money with Azure Files

Azure Files is a fully managed file share in the cloud, offering users the ability to store and access files, similar to traditional file servers. By leveraging Azure’s cloud infrastructure, you can significantly reduce storage costs while gaining flexibility and scalability.

In this guide, we will explore several effective strategies for reducing your Azure Files costs and ensuring you are using the most cost-efficient methods for your file storage needs.

How Azure Files Works

Azure Files is built on the same technology as Azure Blob Storage, but with added support for SMB and NFS protocols. This allows you to easily migrate existing file-based applications to the cloud without making any code changes. It supports built-in data protection, encryption, and access control features.

One key benefit of Azure Files is its flexibility. Users can create multiple file shares and access them from anywhere. Additionally, Azure offers several tools and features that can help you optimize your storage usage and reduce costs. Let’s explore some of the most effective strategies to save money with Azure Files.

1. Choose the Right Storage Tier

Azure Files offers two primary storage tiers: Standard and Premium. By selecting the right storage tier for your workload, you can optimize your costs:

- Standard Tier: Best for general-purpose file shares with infrequent access. It’s a cost-effective solution for most workloads.

- Premium Tier: Ideal for high-performance workloads requiring low latency and high throughput. This tier is more expensive, so it should be used when performance is a priority.

2. Use Azure File Sync

Azure File Sync lets you tier files from your on-premises file server to Azure Files. By keeping only the active files on the local file server, you can reduce costs by offloading rarely accessed files to the cloud.

This cloud tiering feature helps reduce the costs associated with storing infrequently accessed data on local hardware, ensuring you’re only paying for what you actually need. Azure File Sync works with your existing file servers and provides automatic syncing between your on-premises and cloud environments.
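The idea of keeping only active files on the local server can be illustrated with a simple date-based rule. The sketch below is a local simulation, not the algorithm the sync service itself uses: it selects files whose last access is older than a chosen number of days as candidates for offloading. The function name, file names, and the 30-day threshold are assumptions for the example.

```python
from datetime import datetime, timedelta

def files_to_tier(files, now, days_unused):
    """Return names of files not accessed within the last days_unused days.

    'files' maps a file name to its last-access datetime.
    """
    cutoff = now - timedelta(days=days_unused)
    return sorted(name for name, last_access in files.items() if last_access < cutoff)

if __name__ == "__main__":
    now = datetime(2023, 2, 4)
    files = {
        "budget-2023.xlsx": datetime(2023, 2, 1),   # recently used, stays local
        "archive-2019.zip": datetime(2022, 6, 15),  # cold, candidate for the cloud
    }
    print(files_to_tier(files, now, days_unused=30))
```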

3. Use Azure Data Box for Large Data Transfers

If you have large amounts of data to transfer to Azure, consider using Azure Data Box. This physical device enables you to securely transfer large datasets to the cloud without the need for extensive bandwidth. It’s a cost-effective solution for users with significant data migration needs.
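To judge whether a physical transfer is worth it, it helps to estimate how long the same data would take over your network link. The sketch below does that arithmetic. The function name is made up, the 100 TB and 1 Gbps figures are example inputs rather than recommendations, and the `utilisation` parameter is an assumption that lets you model a link that cannot be fully dedicated to the transfer.

```python
def transfer_days(data_tb, link_mbps, utilisation=1.0):
    """Estimate days needed to move data_tb terabytes over a link.

    data_tb uses decimal terabytes (1 TB = 10**12 bytes) and
    link_mbps is the link speed in megabits per second.
    """
    bits = data_tb * 1e12 * 8
    seconds = bits / (link_mbps * 1e6 * utilisation)
    return seconds / 86400

if __name__ == "__main__":
    # 100 TB over a fully used 1 Gbps link, then at 80% utilisation.
    print(round(transfer_days(100, 1000), 2))
    print(round(transfer_days(100, 1000, utilisation=0.8), 2))
```

For 100 TB over a dedicated 1 Gbps link the estimate is a little over nine days, which is the kind of figure that makes shipping a device attractive.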

by Mark | Feb 3, 2023 | Azure, Azure Blobs, Blob Storage, Comparison

Introduction

Azure Data Lake Storage Gen2 and Blob storage are two cloud storage solutions offered by Microsoft Azure. While both solutions are designed to store and manage large amounts of data, there are several key differences between them. This article will explain the differences and help you choose the right solution for your cloud data management needs.

Understanding Azure Data Lake Storage Gen2

Azure Data Lake Storage Gen2 is an enterprise-level, hyper-scale data lake solution. It is designed to handle massive amounts of data for big data analytics and machine learning scenarios. It combines the scalability of Azure Blob Storage with the file system capabilities of Hadoop Distributed File System (HDFS). It’s a fully managed service that supports HDFS, Apache Spark, Hive, and other big data frameworks. Data Lake Storage Gen2 offers the following features:

- Hierarchical namespace: Allows for a more organized and efficient data structure.

- High scalability: Can handle petabytes of data and millions of transactions per second.

- Advanced analytics: Provides integrations with big data frameworks, making it easier to perform advanced analytics.

- Tiered storage: Enables the use of hot, cool, and archive storage tiers, providing flexibility in storage options and cost savings.

Understanding Blob storage

Azure Blob Storage is a cloud-based object storage solution. It’s designed for storing and retrieving unstructured data, such as images, videos, audio files, and documents. Blob Storage is a scalable and cost-effective solution for businesses of all sizes. Blob Storage offers the following features:

- Multiple access tiers: Offers hot, cool, and archive storage tiers, allowing businesses to choose the right storage tier for their needs.

- High scalability: Can handle petabytes of data and millions of transactions per second.

- Data redundancy: Provides data redundancy across multiple data centers, ensuring data availability and durability.

- Integration with Azure services: Integrates with other Azure services, such as Azure Functions and Azure Stream Analytics.

Differences between Azure Data Lake Storage Gen2 and Blob storage

Now that we have explored the features and benefits of both Azure Data Lake Storage Gen2 and Azure Blob Storage, let’s compare the two.

Data Structure

Azure Data Lake Storage Gen2 has a hierarchical namespace, which allows for a more organized and efficient data structure. This means that data can be stored in real directories, and files can be easily accessed and managed. On the other hand, Azure Blob Storage does not have a hierarchical namespace, and data is stored in a flat structure. This can make data management more challenging, but it’s a simpler approach for businesses that don’t require complex data structures.
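One practical consequence of the two structures shows up when renaming a folder. In a flat namespace a "folder" is only a shared name prefix, so every object under it has to be rewritten, while a hierarchical namespace can rename the directory entry itself. The sketch below models both with in-memory dictionaries. It is a toy simulation with made-up function names and data, not code that talks to either service.

```python
def rename_prefix_flat(blobs, old_prefix, new_prefix):
    """Flat namespace: rewrite every blob name that starts with old_prefix."""
    renamed, operations = {}, 0
    for name, data in blobs.items():
        if name.startswith(old_prefix):
            renamed[new_prefix + name[len(old_prefix):]] = data
            operations += 1  # one rewrite per affected blob
        else:
            renamed[name] = data
    return renamed, operations

def rename_dir_hierarchical(tree, old_name, new_name):
    """Hierarchical namespace: move the directory entry in a single step."""
    tree[new_name] = tree.pop(old_name)
    return tree, 1

if __name__ == "__main__":
    flat = {"raw/2023/a.csv": 1, "raw/2023/b.csv": 2, "curated/c.csv": 3}
    print(rename_prefix_flat(flat, "raw/", "landing/"))

    tree = {"raw": {"2023": {"a.csv": 1, "b.csv": 2}}, "curated": {"c.csv": 3}}
    print(rename_dir_hierarchical(tree, "raw", "landing"))
```

The flat rename costs one operation per object, so it grows with the size of the folder, whereas the hierarchical rename stays at a single operation.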

Data Analytics

Azure Data Lake Storage Gen2 is designed specifically for big data analytics and machine learning scenarios. It supports integrations with big data frameworks, such as Apache Spark, Hadoop, and Hive. On the other hand, Azure Blob Storage is designed for storing unstructured data, and it doesn’t have built-in analytics capabilities. However, businesses can use other Azure services, such as Azure Databricks, to perform advanced analytics.

Cost

Both Azure Data Lake Storage Gen2 and Azure Blob Storage offer tiered storage, providing flexibility in storage options and cost savings. However, the storage costs for Data Lake Storage Gen2 are slightly higher than Blob Storage.

To minimise the costs of both Azure Data Lake Storage Gen2 and Azure Blob Storage, you can use Cloud Storage Manager to understand exactly what data is being accessed, or more importantly not being accessed, and where you can possibly save money.

Performance

Azure Data Lake Storage Gen2 offers faster data access and improved query performance compared to Azure Blob Storage. This is because Data Lake Storage Gen2 is optimized for big data analytics and can handle complex queries more efficiently. However, if your business doesn’t require advanced analytics, Blob Storage may be a more cost-effective option.

Use Cases

Azure Data Lake Storage Gen2 is an ideal choice for businesses that require big data analytics and machine learning capabilities. It’s a suitable option for data scientists, analysts, and developers who work with large datasets. On the other hand, Azure Blob Storage is best suited for storing and retrieving unstructured data, such as media files and documents. It’s an ideal option for businesses that need to store and share data with their clients or partners.

Conclusion

In conclusion, Azure Data Lake Storage Gen2 and Blob storage are both cloud storage solutions offered by Microsoft Azure. While both solutions are designed to store and manage data, there are several key differences between them, including data structure, analytics support, cost, performance, and use cases. When choosing between Azure Data Lake Storage Gen2 and Blob storage, consider your data storage needs and choose the solution that best meets those needs.

In summary, Azure Data Lake Storage Gen2 is ideal for big data analytics workloads, while Blob storage is ideal for storing and accessing unstructured data. Both solutions offer strong security features and are cost-effective compared to traditional data storage solutions.

FAQs

Can I use Azure Blob Storage for big data analytics?

Yes, you can use other Azure services, such as Azure Databricks, to perform advanced analytics on data stored in Azure Blob Storage.

Can I use Azure Data Lake Storage Gen2 for storing unstructured data?

Yes, you can use Data Lake Storage Gen2 to store unstructured data, but it’s optimized for structured and semi-structured data.

How does the cost of Data Lake Storage Gen2 compare to Blob Storage?

The storage costs for Data Lake Storage Gen2 are slightly higher than Blob Storage due to its advanced analytics capabilities.

Can I integrate Azure Blob Storage with other Azure services?

Yes, Azure Blob Storage integrates with other Azure services, such as Azure Functions and Azure Stream Analytics.

Is Azure Storage suitable for businesses of all sizes?

Yes, Azure Storage is a scalable and cost-effective solution suitable for businesses of all sizes.

Can you reduce the costs of Azure Blob Storage and Azure Data Lake Storage?

Yes. You can use Cloud Storage Manager to understand growth trends, identify redundant data, and see what can be moved to a lower storage tier.